Oops!

404 error

We can't seem to find that page.

Cybersecurity needs everyone

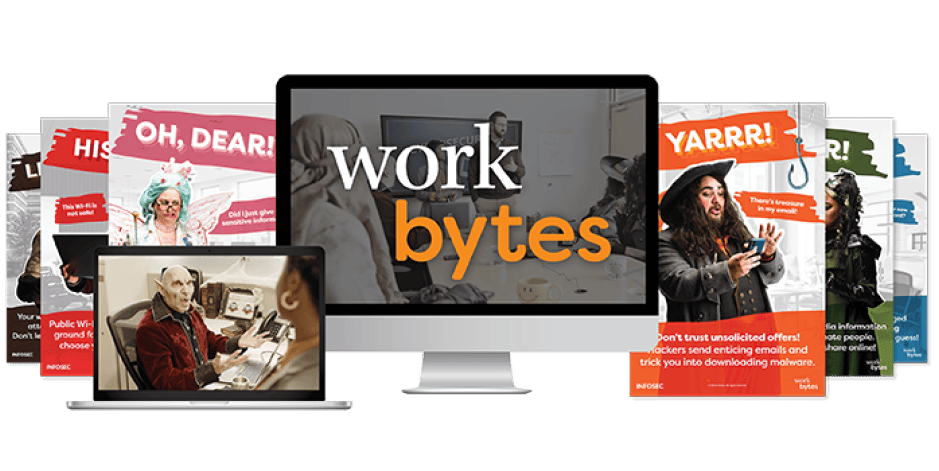

Launch the Work Bytes security awareness training today!

Award-winning training you can trust

What our clients say

"Infosec Skills is a very good place for building technical acumen and engineering development knowledge. It enables us to provide training to the entire team on relevant topics."

Romy Ricafort

West Division Senior Director of Sales Engineering