Introduction to container security

Containers are becoming the new normal for deploying software applications, and many organizations are adopting container technologies rapidly. This article provides an overview of containers and their benefits, along with an introduction to the popular container runtime, Docker. We will also discuss Docker alternatives and the need for container orchestration by introducing Kubernetes.


Overview of containers

A container is a process running on your host that is isolated from other processes. Containers provide operating-system-level virtualization, so applications run in a constrained environment. Without consuming many resources, containers give applications the illusion that they are running on a full-blown operating system. This is achieved with Linux kernel features such as namespaces and cgroups, which will be discussed in greater detail later.
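To make the namespace idea a little more concrete, here is a minimal Python sketch that lists the namespaces a process belongs to by reading the symbolic links under /proc/<pid>/ns on a Linux host. The function name `list_namespaces` and its `proc_root` parameter are illustrative choices for this article, not part of any container tool.

```python
import os


def list_namespaces(pid="self", proc_root="/proc"):
    """Return {namespace_type: identifier} for one process.

    Each entry under /proc/<pid>/ns is a symbolic link whose target
    names the namespace, for example "pid:[4026531836]". Two processes
    that show the same identifier for a type share that namespace.
    """
    ns_dir = os.path.join(proc_root, str(pid), "ns")
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        namespaces[name] = os.readlink(os.path.join(ns_dir, name))
    return namespaces


if __name__ == "__main__":
    # Only meaningful on Linux, where /proc/self/ns exists.
    if os.path.isdir("/proc/self/ns"):
        for ns_type, ident in list_namespaces().items():
            print(f"{ns_type:20} {ident}")
```

Running it once on the host and once inside a container should, in a typical setup, show different identifiers for types such as pid, mnt and net, which is what gives the containerized process its isolated view.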

Containers are normally created by first packaging the application and its dependencies as an image. Anyone with the image and an appropriate runtime can launch the application locally as a container, which makes it easy to share applications without worrying about their dependencies or the application runtime. Everything required to run the application is available within the container.

When an application is deployed directly on a physical server, the server’s resources are often underutilized, while the cost of purchasing and maintaining the server remains substantial. Running applications in virtual machines on that server is one way to use it better. So, let us discuss some of the differences between running applications in virtual machines and in containers.

Virtual machines vs containers

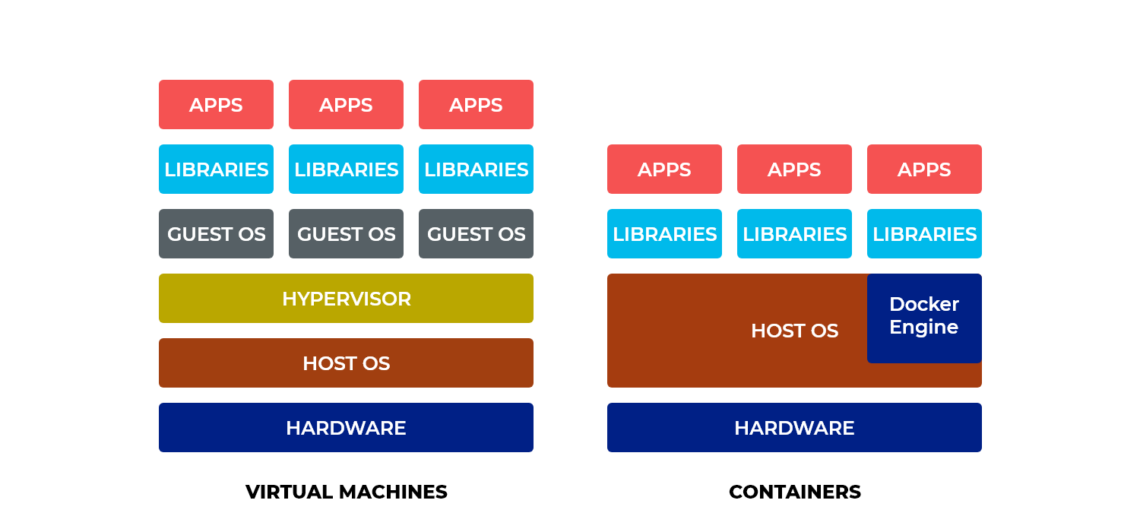

Virtualization makes use of hypervisors to create separate operating system environments. Each virtual machine acts as a separate computer with its own operating system. When a virtual machine is used to deploy an application, the full OS resources may not be used, and hardware resources such as RAM, CPU and hard disk must be configured for each machine.

Containers, in contrast, share the host operating system’s Linux kernel, which means they do not need a separate operating system to run on.

As you can see in the figure, the virtual machines on the left start with the hardware, on top of which we have the host operating system. There is a hypervisor on top of the host operating system. On top of the hypervisor, there are different virtual machines running, and each virtual machine has a guest operating system running inside. Since each virtual machine contains a separate operating system, virtual machine images are large. As the number of virtual machines increases, more resources are required.

In contrast, if you take a look at the containers in the preceding image, there is a host operating system running on the hardware, and software called Docker Engine (the container runtime) is installed on the host operating system to act as a layer between the host operating system and the containers.

If you observe the containers, they do not have a separate operating system as virtual machines do, which makes containers much lighter than virtual machines. This is because they use the Linux kernel of the underlying host, and a root file system is available within each container, giving the feel of running a separate operating system. The following excerpt shows how a Docker container contains a root file system, which looks similar to that of a host.

/ #

/ #

/ # ls

bin etc lib mnt proc run srv tmp var

dev home media opt root sbin sys usr

/ #

This is one of the notable differences between virtual machines and containers. In addition to the root file system, each container gets its own isolated view of processes, devices and the network stack, along with its own CPU and RAM allocation that the kernel can limit.
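The resource side of this isolation comes from the cgroups mentioned earlier. As a rough illustration, the following Python sketch parses the contents of /proc/<pid>/cgroup, where each line has the form hierarchy-ID:controller-list:cgroup-path. The names `CgroupEntry` and `parse_cgroup_file` are made up for this example, and the sample paths in the usage note are hypothetical.

```python
import os
from typing import NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: tuple
    path: str


def parse_cgroup_file(text):
    """Parse the text of /proc/<pid>/cgroup into a list of entries.

    Each line looks like  hierarchy-ID:controller-list:cgroup-path.
    On cgroup v2 there is a single line of the form "0::<path>",
    so the controller list is empty.
    """
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        names = tuple(c for c in controllers.split(",") if c)
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


if __name__ == "__main__":
    # Only meaningful on Linux, where /proc/self/cgroup exists.
    if os.path.exists("/proc/self/cgroup"):
        with open("/proc/self/cgroup", encoding="utf-8") as handle:
            for entry in parse_cgroup_file(handle.read()):
                print(entry)
```

For instance, `parse_cgroup_file("4:cpu,cpuacct:/docker/abc\n")` yields one entry with hierarchy 4, controllers `("cpu", "cpuacct")` and path `/docker/abc`. Inside a container the path usually differs from what a host shell reports, because the runtime places the container's processes in their own cgroup.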

As mentioned earlier, one big advantage of containers is their size. This is possible because containers do not need a separate operating system kernel for each container. We can have any Linux-based operating system as the host and still run the user space of other Linux distributions on top of it. For example, let’s assume that the host operating system is Ubuntu.

Regardless of which distribution is running on the host, we can have containers running CentOS, Red Hat and so on. In addition, since all the application resources are bundled into one package, spinning up an application container from the image is much faster than booting a virtual machine.
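One way to observe the shared kernel is to compare the distribution reported by /etc/os-release with the kernel release reported by the running kernel. The sketch below is a minimal illustration under that assumption; `parse_os_release` and `describe_environment` are hypothetical helper names, and the parser handles only simple KEY=value lines.

```python
import platform


def parse_os_release(text):
    """Parse os-release style KEY=value lines into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Values may be wrapped in single or double quotes.
        info[key] = value.strip().strip('"').strip("'")
    return info


def describe_environment(os_release_path="/etc/os-release"):
    """Report the user-space distribution and the kernel release."""
    try:
        with open(os_release_path, encoding="utf-8") as handle:
            fields = parse_os_release(handle.read())
        distro = fields.get("PRETTY_NAME", "unknown")
    except OSError:
        distro = "unknown"
    return {"userland": distro, "kernel": platform.release()}


if __name__ == "__main__":
    print(describe_environment())
```

Run on a Linux host and then inside a container on that host, the userland value should change with the image while the kernel value stays the same. On setups where the container engine itself runs inside a Linux virtual machine, the kernel reported is that virtual machine's kernel.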

Docker vs rkt and other container runtimes

Docker is so popular that many people think it is the only container runtime available. The concept of containers is not new, however, and it existed before Docker came into existence. chroot can be thought of as an early form of container. All containers rely on a similar concept: virtualizing operating system resources.

However, it was the addition of namespaces and cgroups to the Linux kernel that allowed users to launch truly isolated processes, leading to the containers we see today. These features arrived incrementally, mostly over the 2.6.x kernel series, whose first release was in 2003; cgroups, for example, were merged in 2.6.24 in 2008.

Linux Containers (LXC)

Linux Containers (LXC), initially released in 2008, is a userland container manager that launches an OS init process in the namespaces, so you get a standard multi-process OS environment like a VM. LXC containers can be thought of as lightweight virtual machines, and we can use them as lightweight alternatives to virtual machines with a full-blown operating system.

The following excerpt shows an LXC container running on an Ubuntu virtual machine.

root@growing-termite:~#

root@growing-termite:~#

root@growing-termite:~# id

uid=0(root) gid=0(root) groups=0(root)

root@growing-termite:~#

root@growing-termite:~# ls /

bin boot dev etc home lib lib64 media mnt opt proc root run sbin snap srv sys tmp usr var

root@growing-termite:~#

Docker

Docker is the most widely used containerization software. It uses Docker Engine as its container runtime. Early versions of Docker used LXC as the container execution driver, though LXC was made optional in v0.9 and support was dropped in Docker v1.10. Docker changed the world of containers by making it very easy to package, share and deploy containers. It comes with the docker command line client, which interacts with a daemon running on the host. Using the Docker CLI, we can build, run, stop and delete containers.

Docker can be run on all the popular operating systems today, including Linux, Windows and macOS. The following excerpt shows a Docker container running on an Ubuntu virtual machine.

/ #

/ #

/ # ls

bin etc lib mnt proc run srv tmp var

dev home media opt root sbin sys usr

/ #

rkt

rkt (pronounced like "rocket") is a CLI for running application containers on Linux. It is built from the ground up with security in mind. While Docker runs as a daemon, rkt doesn’t. Instead, it runs as a static binary. rkt doesn’t support Windows. It should be noted that rkt supports spinning up containers using Docker images.

The following excerpt shows a rkt container running on an Ubuntu virtual machine.

run: group "rkt" not found, will use default gid when rendering images

stage1: warning: error setting journal ACLs, you'll need root to read the pod journal: group "rkt" not found

/ # id

uid=0(root) gid=0(root)

/ #

/ #

Note how we are running rkt as a static binary and using a Docker image named alpine.

Docker vs Kubernetes

Docker provides a way to containerize applications and run them. Let us assume that an organization has an application with 25 microservices, each requiring one Docker container. If we choose to use the Docker CLI, we will have to run the docker run command manually, once per microservice, to bring up the whole application. One way to solve this problem is to use docker-compose.

Let us assume that 5 of the microservices need to run on a host with GPU capacity. When demand rises, we may also need to run 5 additional copies of the entire application automatically. Similarly, when demand falls, we may want to remove some instances of the application. To solve all these complex problems, we need a framework. Kubernetes does exactly that. It is a container orchestration framework that can autoscale deployed containers, decide the best node on which to deploy a container, support multiple container runtimes, and more.
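To give a feel for the kind of decisions an orchestrator automates, here is a deliberately simplified Python sketch of node placement and proportional scaling. It is a toy model written for this article, not Kubernetes code or its API: the `Node` class, `pick_node`, `desired_replicas` and the notion of "free slots" are all assumptions made for illustration. The proportional rule is similar in spirit to the one Kubernetes documents for its Horizontal Pod Autoscaler.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    has_gpu: bool
    free_slots: int  # hypothetical measure of spare capacity


def pick_node(nodes, needs_gpu):
    """Return the name of the eligible node with the most free slots, or None."""
    eligible = [
        node for node in nodes
        if node.free_slots > 0 and (node.has_gpu or not needs_gpu)
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda node: node.free_slots).name


def desired_replicas(current_replicas, current_load, target_load):
    """Scale in proportion to load, never dropping below one replica.

    Computes ceil(current_replicas * current_load / target_load).
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    return max(1, math.ceil(current_replicas * current_load / target_load))


if __name__ == "__main__":
    cluster = [Node("cpu-1", False, 4), Node("gpu-1", True, 2)]
    print(pick_node(cluster, needs_gpu=True))
    print(desired_replicas(5, current_load=90, target_load=45))
```

With the sample cluster, a GPU workload can only land on gpu-1, and doubling the load relative to the target doubles the replica count from 5 to 10. A real orchestrator weighs many more factors, but the shape of the problem is the same.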

In simple words, Docker allows us to run containers by acting as a container runtime, whereas Kubernetes helps us to manage these containers.


Conclusion

This article has provided an overview of containers. We discussed a brief history of containers and some alternatives to Docker. We have seen the differences among virtual machines, Linux Containers (multi-process containers such as LXC) and application containers (single-process containers such as Docker).

We then discussed why a container orchestration framework like Kubernetes is needed to manage complex applications built as containers.

Sources

https://www.ctl.io/developers/blog/post/what-is-rocket-and-how-its-different-than-docker/

https://www.openshift.com/learn/topics/rkt

https://rocket.readthedocs.io/en/latest/Documentation/trying-out-rkt/