The Evolutionary Approach to Defense

By: Philip Nowak

The evolutionary approach to IT security seems to be the most natural and efficient way to resist cyber-attacks. The Red Queen Effect describes the relationship between the attacker and the defender - the never-ending story of cyber battles - but can we minimize the 'mean time to know' and respond in time to any security intrusion? Integrated solutions, collaboration, and 'shiny toys' are still not enough. The SIEM-based incident response methodology and intrusion life-cycle presented here can bring relief to any computer security incident handler, and help those who struggle with SIEM deployment and the incident response process. Having seen the Kill Chain's feedback loop and the framework itself, it is time to combine known practices and use them in corporate environments to create a more active and defensive security posture.

What you will learn:

- How to combine the intrusion chain with SIEM

- How to understand SIEM detection capabilities

- What the correlation chain is

- A sample framework

What you should know:

- Generic incident response process

- The intrusion chain

- Detection methods

THE STORY

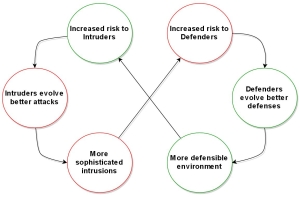

The best example of the Red Queen Effect is the "arms race" between predator and prey, where the predator tries to increase the threat to the prey by developing better attacks, which results in a better defense developed by the prey. This is an evolutionary hypothesis that can be summarized in one sentence: "You evolved, but your competitor has also grown - if you do not move, you fall behind". Referring to Bruce Schneier's work, the Red Queen Effect concept is presented in Figure 1 and mapped to the IT security world. How many times have we heard about new technology that would deliver perfect protection? Personally, I love the tech, and yes, it is a fact that those 'shiny' solutions work and give better protection; however, the attacker is also evolving. Therefore, in the best-case scenario, the adversary and the defender maintain the status quo. In this paper I would like to present the process and tools required to map the intrusion chain to a SIEM system. This will give us, the defenders, precious time for incident response and a continuous opportunity to develop detection capabilities. Eventually it will allow us not to fall behind.

Figure 1. Cyber arms race

INTRODUCTION

During my lectures I am always asked how to understand SIEM systems and the SIEM-based incident response process. A lot of people immediately grasp the idea of data aggregation, correlation, compliance, network forensics, etc. How about integration and detection capabilities?

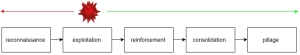

RE-ACTIVE APPROACH

There are many legacy components such as firewalls, intrusion detection and prevention systems, anti-malware structures, AVs, proxy gateways, and data leak prevention mechanisms - all of them feed into SIEM and its control monitoring capabilities. These components provide the blocking-filtering-denying capabilities (Richard Bejtlich, "The Practice of Network Security Monitoring") and that is it! If we see tons of firewall denies caused by a remote host scanning our internet-facing servers, we know that this is potential reconnaissance and that it was stopped!

This action might be followed by a shell injection attack, detected and denied by the IPS, or by 'command and control' communication spotted by the next-generation threat protection system and filtered out by the proxy. The fall of prevention mechanisms is inevitable - the real question is: can the detection process stop the intrusion before the adversaries achieve their goals?

In other words, SIEM follows and controls prevention and detection mechanisms and their effectiveness, and shows where prevention fails, exposing serious holes in our defense lines. At the level of log-based correlation and rules, we talk about SIEMs which simply control and follow. By watching the indicators detected by our security systems (which report block - filter - deny alerts), we gain the time to act in a proper manner, and to do so before the attacker accomplishes his ultimate plan.
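As a rough illustration of the 'control and follow' idea, the sketch below counts firewall denies per source and flags hosts that were denied on several distinct ports, which is one simple sign of reconnaissance. The event fields, the sample addresses, and the `min_ports` threshold are assumptions made for this example and do not come from any particular SIEM.

```python
from collections import defaultdict

# Hypothetical, already-normalized firewall events (field names are assumptions).
EVENTS = [
    {"src": "203.0.113.7", "dst_port": 22, "action": "deny"},
    {"src": "203.0.113.7", "dst_port": 23, "action": "deny"},
    {"src": "203.0.113.7", "dst_port": 80, "action": "deny"},
    {"src": "203.0.113.7", "dst_port": 443, "action": "deny"},
    {"src": "198.51.100.4", "dst_port": 443, "action": "allow"},
    {"src": "198.51.100.4", "dst_port": 25, "action": "deny"},
]


def suspected_scanners(events, min_ports=3):
    """Return sources that were denied on at least `min_ports` distinct ports."""
    ports_by_src = defaultdict(set)
    for event in events:
        if event["action"] == "deny":
            ports_by_src[event["src"]].add(event["dst_port"])
    return sorted(src for src, ports in ports_by_src.items() if len(ports) >= min_ports)


print(suspected_scanners(EVENTS))  # ['203.0.113.7']
```

A single denied connection is noise; the same source being denied across many ports is the pattern worth following up on.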

TRUE MONITORING

As SIEM solutions progressively extend their capabilities (passive traffic monitoring, flow aggregation, vulnerability scanning ...) and offer more than log/event management features (control and follow), I find SIEM very useful when it comes to threat hunting, profiling, or network traffic assessment. Having said that, I would not claim that a SIEM system is only a control measure for detection/prevention mechanisms; it also offers great potential for proactive investigation, advanced correlation, and traffic monitoring.

PRO-ACTIVE MONITORING

Session data, application layer information, vulnerability records, and log and flow reports give a powerful and wide spectrum for threat hunting and security assessments - monitoring or observation activities. The critical thing when dealing with hunting is the knowledge of the territory: you cannot be a good hunter or defender if you do not know the battlefield. Following this analogy, I would strongly suggest preparing a good network hierarchy and maintaining it, in order to have the most up-to-date plan and data source distribution. It is very important, or even required, to know where the sensors are (session data or full content data) and what the day-to-day traffic flow looks like. The knowledge of log sources is also essential, as it supports the transition from one data type to another, which is a powerful trick when it comes to investigation. When dealing with hunting, do not forget about the intrusion chain and its reconstruction.

CORRELATION

There are many interesting approaches to event correlation. For instance, we have graph-based, neural network-based, vulnerability-based, route-based, and finally rule-based correlation. When it comes to SIEM, there is usually a set of rules which follow the pattern: condition - action. This very intuitive mechanism can give you a lot of different security rules, metrics and reports in a short time; the question then becomes how to maintain rules and how to construct them in the most efficient way.
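The condition - action pattern can be sketched in a few lines. This is a minimal illustration and not the rule language of any real SIEM: the `Rule` class, the event fields, and the sample rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, object]


@dataclass
class Rule:
    name: str
    condition: Callable[[Event], bool]  # test applied to every event
    action: Callable[[Event], str]      # what to do when the test passes


def run_rules(rules: List[Rule], events: List[Event]) -> List[str]:
    """Apply every rule to every event and collect the action results."""
    results = []
    for event in events:
        for rule in rules:
            if rule.condition(event):
                results.append(rule.action(event))
    return results


# Hypothetical rule and events, for illustration only.
RULES = [
    Rule(
        name="malware event",
        condition=lambda e: e.get("category") == "malware",
        action=lambda e: f"ALERT malware event on {e['host']}",
    ),
]

EVENTS = [
    {"host": "ws-17", "category": "malware"},
    {"host": "ws-18", "category": "authentication"},
]

print(run_rules(RULES, EVENTS))  # ['ALERT malware event on ws-17']
```

Keeping the condition and the action as separate, named pieces is what makes such rules easy to review, tune, and retire later.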

VISUALIZATION

During a SIEM-based investigation I sometimes find myself asking: why is there no visualization? Of course some SIEMs deliver basic graphs, pie charts or timelines, but this is only the statistical portion of analysis. Imagine having a visualization of flow or session data - a graph with each node representing a system and each edge showing a dependency or conversation between hosts.

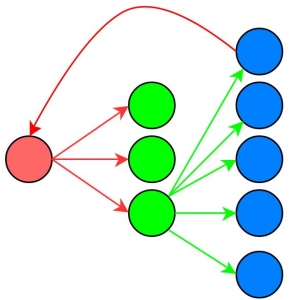

Figure 2. Vector attack

Security visualization is expanding its potential, but I have not seen a system that could present the attack vector or a timeline attack analysis. On the other hand, statistical graphs really help and present a good level of detail. Flow visualization gives a tool for finding the proverbial needle in the haystack.
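The host graph described above can be approximated with very little code. The sketch below aggregates flow records into a directed graph and emits Graphviz DOT text, which an external tool can render. The flow tuples and addresses are made up for the example, and a real SIEM would export flows in its own format.

```python
from collections import defaultdict

# Hypothetical flow records: (source host, destination host, bytes transferred).
FLOWS = [
    ("10.0.0.5", "10.0.0.9", 1200),
    ("10.0.0.5", "10.0.0.9", 800),
    ("10.0.0.9", "203.0.113.50", 50000),
    ("10.0.0.7", "10.0.0.9", 300),
]


def build_graph(flows):
    """Aggregate flows into a directed graph: graph[src][dst] = total bytes."""
    graph = defaultdict(lambda: defaultdict(int))
    for src, dst, nbytes in flows:
        graph[src][dst] += nbytes
    return graph


def to_dot(graph):
    """Render the graph as Graphviz DOT text so an external tool can draw it."""
    lines = ["digraph conversations {"]
    for src in sorted(graph):
        for dst in sorted(graph[src]):
            lines.append(f'  "{src}" -> "{dst}" [label="{graph[src][dst]} B"];')
    lines.append("}")
    return "\n".join(lines)


GRAPH = build_graph(FLOWS)
print(to_dot(GRAPH))
```

In this toy data set the large transfer from an internal host to an external address stands out immediately once the edges are labeled with byte counts.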

Each SIEM solution has its correlation mechanism which integrates many different data sources. The most critical part is when we reach threat detection and situational awareness: we want to have the most current and accurate information. According to the definition from 'Logging and Log Management' (Dr. Anton A. Chuvakin, Kevin J. Schmidt, Christopher Phillips), 'Correlation is the act of matching a single normalized piece of data, or a series of pieces of data, for the purpose of taking an action'.

I will be using the term 'correlation' to describe both advanced normalization and pure correlation, as the processing mechanism used in normalization also takes part in correlation.

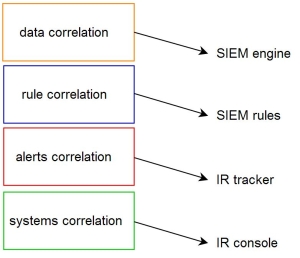

'Data correlation' is the lowest level of correlation, responsible for making the feeds 'smarter': events, and sets of them, are given a specific priority, classification, and categorization. I call it 'data correlation' as 'data' is usually perceived as the rawest feed in the correlation chain presented in Figure 3. This is not a simple normalization based solely on parsing to a common format; it also relies on special adjustments and configuration at the level of the correlation engine (examples: logs from 'more' critical servers should have higher priority; when the same event is logged from multiple systems, the 'confidence' factor should be higher). The mechanism watches each log entry, flow, or other feed and assigns additional factors or characteristics to it. At this stage, the system administrator should perform a security assessment, check which systems, links, or data sets are more critical, and tune the settings accordingly in order to strengthen the correlation capabilities.

Figure 3. The correlation chain
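The two adjustments mentioned for 'data correlation' (higher priority for critical assets, higher confidence when several systems report the same event) can be sketched as a small enrichment step. The criticality table, the field names, and the scoring scheme below are assumptions chosen for illustration; real correlation engines expose their own tuning options.

```python
from collections import defaultdict

# Assumed criticality scores from a security assessment (1 = low, 5 = high).
ASSET_CRITICALITY = {"db-01": 5, "web-01": 3}
DEFAULT_CRITICALITY = 1

# Hypothetical normalized events.
RAW_EVENTS = [
    {"signature": "sql-injection", "target": "db-01", "reporter": "ips"},
    {"signature": "sql-injection", "target": "db-01", "reporter": "waf"},
    {"signature": "port-scan", "target": "ws-17", "reporter": "firewall"},
]


def enrich(events):
    """Return copies of the events with 'priority' and 'confidence' attached."""
    # Collect the distinct systems that reported each (signature, target) pair.
    reporters = defaultdict(set)
    for e in events:
        reporters[(e["signature"], e["target"])].add(e["reporter"])

    enriched = []
    for e in events:
        key = (e["signature"], e["target"])
        enriched.append({
            **e,
            "priority": ASSET_CRITICALITY.get(e["target"], DEFAULT_CRITICALITY),
            "confidence": len(reporters[key]),
        })
    return enriched


for event in enrich(RAW_EVENTS):
    print(event["signature"], event["target"], event["priority"], event["confidence"])
```

Rules built on top of enriched events can then filter on priority and confidence instead of re-deriving them in every test.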

The next step in the correlation chain is the SIEM rules. Here you create tests, combinations of tests, mixes of data types, and anything else that might provide detection capabilities. Rules can combine logs, flows, and other feeds, which makes them a powerful tool. Do not forget about system performance, and avoid mistakes such as keyword searches on raw payload. The rule creation mechanism depends strictly on the chosen SIEM.

The third level is all about reporting and report tracking. Each dispatched report is forwarded to a ticketing system (according to the RTIR software workflow, the 'report' is the warning produced by the detection engine, and it may become an 'alert' if the analyst decides so: report -> alert -> incident -> investigation). There, the reports are escalated, assigned, or deleted by analysts. As tracking systems can be designed to correlate, reports are another layer where we can spot that multiple reports have something in common. This can be very useful, even crucial, when it comes to campaign tracking and verifying how the intrusion is evolving over time.

Corporations usually have multiple sites, networks, and assigned responsibilities. If you have several locations and monitoring consoles, it is a perfect opportunity to correlate alerts between sites, and to use an indicator spotted in one place to fortify or warn another site. In one 'mother' console, we want to know where the serious threats have been spotted and how we can mitigate them.

In conclusion, always keep the following in mind: work on the quality of SIEM feeds, try to correlate data on different levels (entry, rule and alert), and treat performance as another priority.

DETECTION

Before any threat can be spotted, detection rules need to be deployed. SIEM makes it possible to create event, flow, alert, anomaly and behavioral rules. These are typically scenarios describing a particular security incident, breach or policy violation. Very often rules are built only on specific events taken from particular appliances (driven by the vendor), which is very ineffective. I recommend creating scenarios based on multiple different data sources and avoiding purely quantitative analysis. It is crucial to understand that by integrating the information gathered within SIEM, the security team is given unique intelligence from the observed environment. Rules should not only be built on specific events, flows, etc., but should also leave 'space' for creativity and research. This is understood as mixing data sources, levels of detail, time, categorization, physical localization, etc. Do not forget that rules are the static part of intrusion detection and are used to spot the signs of a breach or policy violation. A similar approach to data integration can be used when manually hunting a threat - using filters, searches, pivoting, profiles and aggregation. As I noted before, a rule can be a simple combination of different data types.

We can construct rules in the following way:

Let us take a simple example and analyze the 'virus detected' rule in action. If an event from the AV console states that some malicious content was found, the alert is dispatched and the analyst is warned. The standard procedure says that the source of the alert should be found and the workstation remediated. It is as simple as that. But how can we benefit from this kind of generic rule and its tuning capabilities?

First of all, generic rules are not bad! Very often I see security teams that switch off all generic tests and try to detect intrusions on their own. This is the wrong approach. Generic rules should be enabled at the very beginning, as they cover many detection techniques across the intrusion chain model. What is more, they can evolve and be tuned in line with any circumstances. The third argument is that generic rules are efficient and easy to craft.

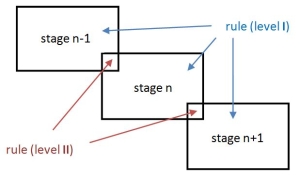

Basically, when creating rules, we try to find specific patterns and indicators that might reveal some kind of suspicious activity. SIEM can use rules which describe scenarios such as 'if activity A is followed by activity B then alert'. A classic example is 'successful network logon after multiple authentication failures'. This type of detection describes suspicious behavior and should be used; here I would like to go one step further: detection rules can be used to cover short intrusion chains. By this I mean that any two adjacent links from the intrusion chain can be combined into a detection rule. Construction:

figure_b
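The 'activity A followed by activity B' scenario can be sketched for the classic logon example. This is an illustration under stated assumptions and not a vendor rule: the event fields, the threshold of three failures, and the 60-second window are all hypothetical, and the events are assumed to arrive sorted by time.

```python
from collections import defaultdict, deque

# Hypothetical authentication events, sorted by time (seconds).
AUTH_EVENTS = [
    {"ts": 0, "user": "alice", "outcome": "failure"},
    {"ts": 5, "user": "alice", "outcome": "failure"},
    {"ts": 9, "user": "alice", "outcome": "failure"},
    {"ts": 12, "user": "alice", "outcome": "success"},
    {"ts": 20, "user": "bob", "outcome": "failure"},
    {"ts": 25, "user": "bob", "outcome": "success"},
]


def logon_after_failures(events, min_failures=3, window=60):
    """Alert when a success follows >= min_failures failures within `window` seconds."""
    recent_failures = defaultdict(deque)  # user -> timestamps of recent failures
    alerts = []
    for e in events:
        failures = recent_failures[e["user"]]
        # Drop failures that have fallen out of the time window.
        while failures and e["ts"] - failures[0] > window:
            failures.popleft()
        if e["outcome"] == "failure":
            failures.append(e["ts"])
        elif e["outcome"] == "success":
            if len(failures) >= min_failures:
                alerts.append((e["user"], e["ts"], len(failures)))
            failures.clear()  # a success resets the sequence
    return alerts


print(logon_after_failures(AUTH_EVENTS))  # [('alice', 12, 3)]
```

Bob's single mistyped password does not trigger the rule, while Alice's three failures followed by a success do. Tuning `min_failures` and `window` is exactly the kind of adjustment the tuning phase is meant for.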

Recently I have been investigating an intrusion caused by a botnet exploiting a PHP vulnerability. The situation could be split into several steps: scan, delivery, exploitation, and C&C communication; this is a classic example. After the incident response (SIEM-based of course!), I listed all events/flows for that particular intrusion and grouped them by category (each event has a tag or a category name assigned) - this is the taxonomy factor when it comes to log normalization.

Access Reconnaissance Exploit

The 'Reconnaissance' link followed by 'Exploit' worked perfectly. I knew what was going on, and I could easily gather all the evidence from SIEM within minutes. After that, I scoped the incident and checked the affected hosts. Very often a particular detection rule is too 'loose' and might not be triggered - this is why you really need tuning (and the observation part) and several variants of the same rule (especially when the test is statistics-based). In similar cases, when I get the attacker's IP, suspected network, or other indicators, I manually check the network looking for similar footprints. After mitigation and remediation it is crucial to document the findings and learn from the intrusion chain reconstruction. Try to 'group by' the event category field and check which detection categories have been touched by the intruder, and how you can use this for further development and hardening. The detection rules are neither the beginning nor the end. They are, and will be, a process of tuning and adjustments.
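The 'group by' step on the event category field could look like the sketch below. The events, timestamps, and event names are invented for illustration; only the category labels echo the ones listed above, and a real SIEM would normally do this grouping in its own search interface.

```python
from collections import Counter

# Hypothetical events collected for one incident; values are illustrative.
INCIDENT_EVENTS = [
    {"ts": 100, "category": "Reconnaissance", "name": "port scan"},
    {"ts": 130, "category": "Reconnaissance", "name": "web directory scan"},
    {"ts": 200, "category": "Exploit", "name": "PHP code injection"},
    {"ts": 260, "category": "Access", "name": "outbound connection to unknown host"},
]


def categories_touched(events):
    """Count events per category and record when each category was first seen."""
    counts = Counter(e["category"] for e in events)
    first_seen = {}
    for e in sorted(events, key=lambda e: e["ts"]):
        first_seen.setdefault(e["category"], e["ts"])
    return counts, first_seen


counts, first_seen = categories_touched(INCIDENT_EVENTS)
for category, ts in sorted(first_seen.items(), key=lambda item: item[1]):
    print(f"{category}: {counts[category]} event(s), first seen at t={ts}")
```

Ordering the categories by first appearance gives a rough outline of the reconstructed chain, and any chain link with no events at all points to a detection gap worth a new rule.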

Figure 4. The intrusion chain in SIEM rules

To efficiently use the SIEM capabilities and the intrusion chain model, my idea is to write rules which combine two contiguous links within a chain, as depicted in Figure 4. I like to name and differentiate rules based on their level of detail. The level 1 rules should be deployed across each link in the chain - make sure that you cover as wide a spectrum of scenarios as possible. The level 2 rules combine detection tests from adjacent links. Examples: a scan followed by exploitation, or a new process started followed by a blocked outbound connection.
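One possible way to express a level 2 rule is to pair level 1 alerts that hit the same host from adjacent chain links within a time window. The chain below is a simplified, assumed three-link model, and the alert fields and the 300-second window are hypothetical.

```python
# Simplified chain used only for this sketch (an assumption, not a standard model).
CHAIN = ["reconnaissance", "exploitation", "command_and_control"]

# Hypothetical level 1 alerts: each is tied to one chain link and one host.
LEVEL1_ALERTS = [
    {"ts": 10, "link": "reconnaissance", "host": "web-01"},
    {"ts": 40, "link": "exploitation", "host": "web-01"},
    {"ts": 55, "link": "command_and_control", "host": "web-01"},
    {"ts": 70, "link": "reconnaissance", "host": "web-02"},
]


def level2_alerts(alerts, window=300):
    """Pair level 1 alerts on the same host from adjacent links, in chain order."""
    position = {link: i for i, link in enumerate(CHAIN)}
    ordered = sorted(alerts, key=lambda a: a["ts"])
    pairs = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            same_host = first["host"] == second["host"]
            adjacent = position[second["link"]] - position[first["link"]] == 1
            in_window = second["ts"] - first["ts"] <= window
            if same_host and adjacent and in_window:
                pairs.append((first["link"], second["link"], first["host"]))
    return pairs


print(level2_alerts(LEVEL1_ALERTS))
```

Here `web-01` produces two level 2 hits (reconnaissance followed by exploitation, then exploitation followed by command and control), while the lone scan against `web-02` stays a level 1 alert.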

Proper correlation and detection rules are the first steps in integrating the intrusion chain model with SIEM. You also need alert tracking software to correlate multiple reports and recognize intrusion patterns.

Ticketing systems allow for automatic knowledge base creation and maintenance of intelligence records on historic indicators, which might be spotted again in a later alert. Tools and rules are useless if you do not know how to use them together and benefit from the SIEM-based incident response. The following section presents a sample framework and recommendations.

FRAMEWORK

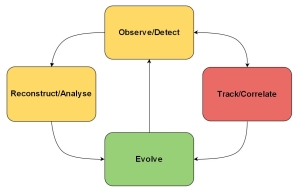

When working in SOCs, I have noticed that the high-level workflow for SIEM threat detection and incident response may be depicted as in Figure 5. There is no start or end in the workflow; this is an ongoing process.

Figure 5. Sample SIEM IR workflow

Starting from the Detect/Observe point, as it is the most natural, I would like to divide the 'detection' phase into two categories. The first is rule- and statistics-based detection (reactive). Here the alert is triggered by a full or partial match with the detection rule. The second one I call 'the observation' part (proactive). Personally, I suggest using manual log review methods, traffic assessments, profiling and routine threat hunting. This proactive approach can be very beneficial and reveals potential security intrusions (undetected by alert data), security holes, and missing detection rules.

Figure 6. The intrusion chain model

Following the detection phase is the incident response part. I use the intrusion chain model proposed by Richard Bejtlich, as it is the most generic and can be adjusted to any security breach (each stage can be divided into several more specialized links). Having that in mind, I think that the critical part of the analysis is to reconstruct the intrusion chain. The analyst should create the timeline analysis and document how the intrusion proceeded, where it was first spotted, how the detection/prevention systems responded, and what countermeasures should be taken.

The output from the reconstruction phase must be utilized; if not, the whole detection-response workflow is wasted. I believe that each detected and observed indicator must be analyzed and used, so that detection capabilities are strengthened and the whole system can evolve to prevent new threats and mitigate the old ones. Important points in the 'evolve' phase are: tune your rules to detect the intrusion as early as possible, use the reconstruction phase to fix your configuration and vulnerability issues, and create new rules for newly discovered indicators.

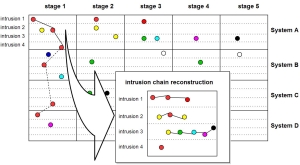

TRACKING

I placed the 'track' phase in the workflow, and I think that this step is critical for the entire detection-response process. SIEM can be combined with a ticketing system to automatically track all alerts generated by the correlation engine. This is beneficial, as SIEM analysts can follow intrusions, see how the attack is progressing, filter out false positives, apply an additional layer of correlation, and check if there are any similarities between alerts (or reports) or matching indicators. I find it great, as there are no processes or standalone systems (or even SIEM itself) that can update the 'lessons learned' documentation and remember all previous alerts; here, the tracking system does it automatically.

Usually I encounter the situation where SIEM analysts manually create reports in the tracking software. When an alert is triggered in the SIEM console, they analyze the case, fill in the incident template, and save the form in a database for further investigation and documentation. There is no correlation between cases, reports are not updated, and manual reviews are time-consuming - and that is why they are considered useless. In such a situation, it is impossible to track the intrusions, spot the campaigns, and most of all evolve.

Figure 7. Tracking intrusions

In Figure 7, I am presenting alerts (colored dots) generated and correlated at many different sites (System A, B, C, D). Each system has its own ticketing tool, which is connected to the SIEM and tracks all alerts generated by the correlation engine. As we know, each attack can be divided into stages - here we have five stages. The system looks for similarities between alerts and groups them into intrusions. An analyst looking into the system can find which alerts have been correlated together and whether there were any similar indicators between intrusions. If so, those intrusions are marked and grouped together, creating campaigns. The picture shows that the red indicator has been spotted in Stage 1 at 4 different sites - a possible botnet scanning campaign.
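The campaign grouping described for Figure 7 can be approximated by mapping each (stage, indicator) pair to the set of sites where it was seen. The records, site names, and the `min_sites` threshold below are hypothetical; a real ticketing system would supply its own fields through its API.

```python
from collections import defaultdict

# Hypothetical intrusions already grouped per site by each ticketing tool.
INTRUSIONS = [
    {"site": "A", "stage": 1, "indicator": "203.0.113.7"},
    {"site": "B", "stage": 1, "indicator": "203.0.113.7"},
    {"site": "C", "stage": 1, "indicator": "203.0.113.7"},
    {"site": "D", "stage": 1, "indicator": "203.0.113.7"},
    {"site": "A", "stage": 3, "indicator": "evil.example"},
    {"site": "C", "stage": 2, "indicator": "198.51.100.4"},
]


def find_campaigns(intrusions, min_sites=2):
    """Map (stage, indicator) to the sites where it was seen, keeping multi-site ones."""
    sites = defaultdict(set)
    for item in intrusions:
        sites[(item["stage"], item["indicator"])].add(item["site"])
    return {key: sorted(seen) for key, seen in sites.items() if len(seen) >= min_sites}


print(find_campaigns(INTRUSIONS))  # {(1, '203.0.113.7'): ['A', 'B', 'C', 'D']}
```

The same indicator appearing in Stage 1 at four sites is flagged as a candidate campaign, while indicators seen at a single site remain ordinary intrusions.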

If you have a ticketing system (TS), most likely you can adjust it and connect it with a SIEM. Check the API of both the tracking tool and the SIEM. Verify how to forward alerts from the SIEM and how to deliver as many details as possible. On that basis, try to connect alerts in the TS by comparing fields such as IP, localization, categories, etc. Even if the generated alert is a false positive, or not actionable, you should know about it; tune it out and see the reports in the TS. In the following section I summarize some of the most important takeaways.

GUIDELINES

Five steps to map the intrusion chain model with SIEM-based incident response.

- Map each data type to a category tag.

- Go from generic tests toward more strict and specific detection rules. Use a gradual development and use different variations for the same test.

- Prepare detection rules for each link within the intrusion chain - level 1 and level 2 rules.

- Establish connectivity between SIEM and the ticketing system (IR tracking system).

- Use the reconstruction-observation phases and develop your system and network on the basis of the discovered or observed IOC.

Five steps to make SIEM detection easier.

- Perform a risk assessment before the tuning phase.

- Create reports for log and flow activity; the starting point for observation. Reduce noise.

- Maintain network hierarchy.

- Care about data quality and system performance.

- Evolve and learn after each alert.

CONCLUSIONS

There are several motivations behind this article. First of all, I believe that technology cannot substitute for experienced analysts with good processes and methodologies. Sophisticated security appliances, basic rules, and reports tend to generate tons of false positives; in the meantime, serious threats remain undetected and evade those techniques. This is why the observation phase followed by the reconstruction stage should be deployed in the SIEM incident response process and used every time an IOC is detected.

I have presented how to map the intrusion kill chain to SIEM, how to construct efficient rules, and how I understand the correlation chain. It is beneficial to have a ticketing system in play, as it tremendously improves the security posture. The tracking phase can reveal campaigns, expose the same indicators within different alerts, and speed up the tuning. The presented SIEM-based detection-response framework focuses on the sub-process of evolution, which is described as an ongoing action with constant tuning, research, and analysis. This paper ends with several guidelines and a summary.

What should you learn next?

About the author: Filip Nowak works as an IT security researcher and incident response analyst. Working for several Fortune 500 companies, he is responsible for deploying SIEMs and effective security response processes. His work combines detecting and mitigating corporate intrusions with research into threat hunting with integrated solutions. At the same time, the author extends his knowledge of digital archeology and forensic investigations. Highly motivated, passionate about security. The article comes from eForensics Magazine OPEN.