Web application testing with Arachni

What is Arachni?

In very simple terms, Arachni is a tool that allows you to assess the security of web applications.

In less simple terms, Arachni is a high-performance, modular, Open Source Web Application Security Scanner Framework.

It is a system which started out as an educational exercise and as a way to perform specific security tests against a web application in order to identify, classify and log issues of security interest.

It has now evolved into an infrastructure which can reliably perform any sort of WebApp-related security audit and general data scraping.

Why is it important?

No idea... it may not be. However, since the developer (me) is the one writing this article, it's really hard to stay objective, especially when I've got to make this interesting.

The most important terms in the previous description are "high-performance" and "modular".

Scanning websites can be extremely time-consuming, so it is an absolute requirement that you manage your time carefully, make the most of it and find the sweet spot between performance and accuracy.

Having a very fast scanner means that you're halfway there.

Let me provide some context:

Scan times can range from a few hours to a couple of weeks, maybe even more. This means that wasted time can easily pile up, even when we're talking about mere milliseconds per request/response.
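To put some numbers on that (these are illustrative assumptions, not measurements): if a scan sends a million requests and each one wastes just 5 ms, the overhead alone adds up to well over an hour.

```ruby
# Illustrative assumptions, not measurements.
requests      = 1_000_000 # requests sent over the course of one scan
waste_per_req = 0.005     # seconds lost per request (5 ms)

wasted_seconds = requests * waste_per_req
wasted_hours   = wasted_seconds / 3600

puts format("%.0f seconds wasted (about %.1f hours)", wasted_seconds, wasted_hours)
```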

On the flip side, the more data you have about specific behavioral patterns, the more accurate your assessment will turn out to be. And the way to get more data is to perform more requests, but more requests mean more time.

You can see why this can become troublesome: there are two objectives that need to be accomplished, and they are mutually opposed.

Arachni benefits from great network performance due to its asynchronous HTTP request/response model. In this case, and from a high-level perspective, asynchronous I/O means that you can schedule operations so that they appear to be happening at the same time, which in turn means higher efficiency and better bandwidth utilization.
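A minimal sketch of why overlapping I/O waits helps, assuming ten requests that each spend 50 ms waiting on the network. Latency is simulated with `sleep` and no real HTTP is performed; the sketch also uses plain Ruby threads rather than an event loop, so it illustrates the idea only, not Arachni's own HTTP layer.

```ruby
LATENCY = 0.05 # seconds of simulated network wait per request (assumed)

# Stands in for an HTTP request: all the time is spent waiting, not computing.
def fake_request(id)
  sleep LATENCY
  "response #{id}"
end

# Wait for each response before sending the next request.
def run_sequential(ids)
  ids.map { |id| fake_request(id) }
end

# Start every request at once, then collect results in the original order.
def run_concurrent(ids)
  threads = ids.map { |id| Thread.new { fake_request(id) } }
  threads.map(&:value)
end

# Returns [block result, elapsed seconds].
def timed
  start  = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  result = yield
  [result, Process.clock_gettime(Process::CLOCK_MONOTONIC) - start]
end

ids = (1..10).to_a
seq_result, seq_time = timed { run_sequential(ids) }
con_result, con_time = timed { run_concurrent(ids) }

puts format("sequential: %.2f s, concurrent: %.2f s", seq_time, con_time)
```

Run sequentially, the waits add up to roughly half a second; overlapped, the whole batch takes about as long as a single request.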

The second objective, accuracy, depends on the testing techniques of a given module. Sometimes it is a hit-and-miss situation, other times it's a definitive result and in rare cases there's only a hint of an issue.

Because Arachni is written in Ruby and provides a very simple and extensive API for modules and plug-ins, contributors can easily add more tests or even port entire applications into the framework as plug-ins.

That is why Arachni can be considered an important system.

It provides a high-performance environment for the tests that need to be executed while making it very easy to add new ones.

"Well duh!" you may say, but there are not a lot of other Open Source systems that have both of these traits.

The above, combined with the fact that it's the only Open Source system of its kind with such distributed deployment options, makes Arachni the first and only system that pretty much has the whole package. I think...

Overview

This section will sound like cheap marketing talk and will be a copy-paste from Arachni's website, and there's a good reason for that.

A technical overview of the components that form the framework could fill a book, or two, so I can only give you the skinny; if you want to delve into the more technical aspects of the system, take a look at the blog posts or the Wiki.

Here it goes:

Automation

Arachni is a fully automated system which tries to enforce the fire-and-forget principle.

As soon as a scan is started, it will not bother you for anything or require further user interaction.

Upon completion, the scan results will be saved in a file which you can later convert to several different formats (HTML, Plain Text, XML, etc.).

Performance

In order to maximize bandwidth utilization and get the most bang for the buck (an unfortunate choice of words since Arachni is free) the system uses asynchronous HTTP requests.

Thus, you can rest assured that the scan will be as fast as possible and performance will only be limited by your own or the audited server's physical resources.

Intelligence

Arachni uses various techniques to compensate for the widely heterogeneous environment of web applications.

This includes a combination of widely deployed techniques (taint-analysis, fuzzing, differential analysis, timing/delay attacks) along with novel technologies (rDiff analysis, modular meta-analysis) developed specifically for the framework.

This allows the system to make highly informed decisions using a variety of different inputs; a process which diminishes false positives and even uses them to provide human-like insights into the inner workings of web applications.
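To give a flavor of differential analysis, here is a minimal, generic boolean-based check. It assumes a hypothetical `app` callable that maps an input value to a response body. It is not Arachni's rDiff implementation, and a robust check would also repeat each probe to filter out content that changes on its own.

```ruby
# Generic boolean-based differential check (illustrative, not rDiff).
def differential_check(app, original_value)
  baseline   = app.call(original_value)
  true_resp  = app.call("#{original_value} AND 1=1") # should look like the baseline
  false_resp = app.call("#{original_value} AND 1=2") # should look different

  # Flag only when the "true" probe matches the baseline AND the "false"
  # probe diverges; one differing response on its own proves nothing.
  true_resp == baseline && false_resp != baseline
end

# Toy vulnerable app: behaves as if the trailing AND clause were evaluated.
vulnerable_app = lambda do |value|
  value.end_with?("AND 1=2") ? "No results" : "Product #{value.to_i}"
end

# Toy safe app: treats anything that is not a plain number as a literal miss.
safe_app = lambda do |value|
  value.match?(/\A\d+\z/) ? "Product #{value}" : "Product not found"
end

puts differential_check(vulnerable_app, "42")
puts differential_check(safe_app, "42")
```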

Trainer

The Trainer is what enables Arachni to learn from the scan it performs and incorporate that knowledge, on the fly, for the duration of the audit.

Arachni is aware of which requests are more likely to uncover new elements or attack vectors and adapts itself accordingly.

Also, individual components can force the Framework to learn from the HTTP responses they are going to induce, thus improving the chance of uncovering a hidden vector that would appear as a result of their probing.
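A minimal sketch of that feedback loop, assuming a hypothetical `fetch` callable and naive regex-based link extraction: every response is mined for links, and anything not seen before is queued for auditing. This illustrates the idea only; it is not Arachni's Trainer code.

```ruby
require "set"

class ToyTrainer
  attr_reader :queue, :seen

  def initialize(seed_urls)
    @seen  = Set.new(seed_urls)
    @queue = seed_urls.dup
  end

  # Extract href values and enqueue the ones not encountered yet.
  def learn_from(body)
    body.scan(/href="([^"]+)"/).flatten.each do |url|
      next if @seen.include?(url)

      @seen << url
      @queue << url
    end
  end

  # Audit queued URLs; each response can feed new URLs back into the queue.
  def run(fetch)
    audited = []
    until @queue.empty?
      url = @queue.shift
      audited << url
      learn_from(fetch.call(url))
    end
    audited
  end
end

pages = {
  "/"             => '<a href="/login">Login</a>',
  "/login"        => '<a href="/account?id=1">Account</a>', # appears only in the /login response
  "/account?id=1" => "<p>no links</p>"
}

trainer = ToyTrainer.new(["/"])
p trainer.run(->(url) { pages.fetch(url, "") })
```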

Modularity

One of the biggest advantages of Arachni is its highly modular nature. The framework can be extended indefinitely by the addition of components like path extractors, modules, plug-ins, or even user interfaces.

Arachni is not only meant to serve as a security scanner but also as a platform for any sort of black box testing or data scraping; full-fledged applications can be converted into framework plug-ins so as to take advantage of the framework's power and resources.

Its flexibility goes so far as to enable system components (like plug-ins) to create their own component types and reap the benefits of a modular design as well.

Modules

Arachni has over 40 audit (active) and recon (passive) modules which identify and log entities of security and informational interest.

These entities range from serious vulnerabilities (code injection, XSS, SQL injection and many more) to simple data scraping (e-mail addresses, client-side code comments, etc.).

The difference between the two is that audit modules probe the website via vectors like forms, links, cookies and headers, while recon modules look for things without the need to actively interact with the web application.

For example:

An XSS module would be an audit module because it needs to send input to the web application and evaluate the output.

A module that looks for common directories, like "admin", is a recon module because it does not interact with the web application. The same applies for a module that scans the web application's pages for visible e-mail addresses.

The difference between the two types is purely behavioral; technically they are the same and share the same API.
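A minimal sketch of that idea, with hypothetical names (`ToyModule`, `#run`, `page:`, `submit:`) standing in for Arachni's real module API: both module types are built the same way and expose the same `run` method; only their behavior differs. The XSS check is deliberately naive and only looks for an unescaped echo of a probe string.

```ruby
# Hypothetical base class: every module gets the same inputs and exposes #run.
class ToyModule
  def initialize(page:, submit:)
    @page   = page   # body of an already-fetched page (String)
    @submit = submit # callable that sends an input value and returns the body
  end

  def run
    raise NotImplementedError, "#{self.class} must implement #run"
  end
end

# Audit (active) module: sends input and evaluates the output.
class ToyReflectedXSS < ToyModule
  PROBE = "<xss_probe_7f3a>"

  def run
    body = @submit.call(PROBE)
    body.include?(PROBE) ? [{ type: :xss, probe: PROBE }] : []
  end
end

# Recon (passive) module: only inspects data it already has.
class ToyEmailFinder < ToyModule
  EMAIL = /[\w.+-]+@[\w-]+(?:\.[\w-]+)+/

  def run
    @page.scan(EMAIL).uniq.map { |address| { type: :email, value: address } }
  end
end

echo_app = ->(input) { "<p>You searched for #{input}</p>" } # reflects input unescaped
page     = "Contact admin@example.com or sales@example.com."

modules  = [ToyReflectedXSS, ToyEmailFinder].map { |klass| klass.new(page: page, submit: echo_app) }
findings = modules.flat_map(&:run)
findings.each { |finding| p finding }
```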

A full list of modules can be found at: http://arachni.segfault.gr/overview/modules

Plug-ins

Arachni offers plug-ins to help automate several tasks ranging from logging-in to a web application to performing high-level meta-analysis by cross-referencing scan results with a large amount of environmental data.

Unlike modules and reports, plug-ins are framework demi-gods. Each plug-in is passed the running framework instance to do with as it pleases.

Via the framework they have access to all Arachni subsystems and can alter or extend Arachni's behavior on the fly.

Plug-ins run in parallel to the framework and are executed right before the scan process starts.
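A minimal sketch of that plug-in model, using hypothetical names (`ToyFramework`, `ToyPlugin`, `CookieInjector`) rather than Arachni's actual classes: each plug-in is handed the framework instance and runs in its own thread, started right before the scan.

```ruby
class ToyFramework
  attr_reader :options, :log

  def initialize(options)
    @options = options
    @log     = Queue.new # thread-safe message log
  end

  def run(plugins)
    # Each plug-in gets a reference to this very framework instance.
    threads = plugins.map { |klass| Thread.new { klass.new(self).run } }
    @log << "scan started for #{@options[:url]}"
    # ... the actual crawl/audit would happen here ...
    threads.each(&:join)
    @log << "scan finished"
  end
end

class ToyPlugin
  def initialize(framework)
    @framework = framework
  end
end

# Example plug-in: alters framework behavior on the fly by changing options.
class CookieInjector < ToyPlugin
  def run
    @framework.options[:cookies] = { "session" => "hypothetical-token" }
    @framework.log << "cookie injected"
  end
end

fw = ToyFramework.new({ url: "http://test.com" })
fw.run([CookieInjector])
p fw.options[:cookies]
```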

A full list of plug-ins can be found at: http://arachni.segfault.gr/overview/plugins

Reports

Report components allow you to format and/or export scan results in a desired format or fashion.

If the existing reports (HTML, Plain Text, XML, etc.) don't fulfill your needs it is very easy to create one that suits you.

There are no restrictions on their exact behavior, meaning that anyone can develop a report component that saves the scan results to a database, transmits them over the wire, and much more.
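A minimal sketch of pluggable reports, assuming hypothetical result fields and class names (Arachni's real report API and the .afr format are not shown): each report receives the results plus an IO-like destination, so sending output to a file or a socket only means passing a different object.

```ruby
require "json"
require "stringio"

# Hypothetical scan results.
RESULTS = [
  { name: "Cross-Site Scripting (XSS)", url: "http://test.com/search", var: "q" },
  { name: "SQL Injection",              url: "http://test.com/item",   var: "id" }
].freeze

class ToyReport
  def initialize(out)
    @out = out # any IO-like object: a File, a socket, a StringIO...
  end
end

class PlainTextReport < ToyReport
  def run(results)
    results.each_with_index do |issue, i|
      @out.puts "[#{i + 1}] #{issue[:name]} in '#{issue[:var]}' at #{issue[:url]}"
    end
  end
end

class JSONReport < ToyReport
  def run(results)
    @out.write(JSON.pretty_generate({ issues: results }))
  end
end

text = StringIO.new
PlainTextReport.new(text).run(RESULTS)
puts text.string

json = StringIO.new
JSONReport.new(json).run(RESULTS)
puts json.string
```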

A full list of reports can be found at: http://arachni.segfault.gr/overview/reports

Flexible deployment

The system allows for multiple deployment options ranging from a simple single-user single-scan command line interface to multi-user multiple/parallel-scan distributed deployment utilizing server pools.

Control of distributed deployments is achieved using XMLRPC in an effort to increase interoperability and cross-platform compliance.

In simple terms, this means that you can go from performing simple command-line scans like:

$ arachni http://my.site.com/

to just as easily build scanner clusters in a couple of minutes via the WebUI.

Installation

Installation instructions for the latest version can be found at http://arachni.segfault.gr/latest.

There are three installation options each with its own merits.

Self-contained CDE package for Linux

If you are a Linux user and can't be bothered or don't have enough permissions to install Ruby and further dependencies, the CDE package will allow you to use Arachni out of the box.

Download, extract and run.

Gem

If you already have Ruby 1.9.2 installed you can take advantage of Arachni just by running:

$ gem install arachni

(You will also need the following libraries: libxml2-dev, libxslt1-dev, libcurl4-openssl-dev, libsqlite3-dev)

Source

If you want to hack away and modify the code, you can clone the Git repository and run:

$ rake install

from inside the source directory to install all dependencies and be able to run the scripts under the "bin/" directory.

Usage/Deployment

It's time for the fun part; time to demo some runs of the system and showcase the different interfaces.

Each interface has different features and thus different merits.

Command-line

The command-line interface is the simplest, most tested and most stable.

It provides quick single-user single-scan access to Arachni's facilities.

Help

In order to see everything Arachni has to offer execute:

$ arachni -h

Or visit: https://github.com/Zapotek/arachni/wiki/Command-line-user-interface

Examples

You can simply run Arachni like so:

$ arachni http://test.com

which will load all modules and audit all forms, links and cookies.

In the following example, all modules will be run against http://test.com, auditing links/forms/cookies and following subdomains, with verbose output enabled.

The results of the audit will be saved in the file test.com.afr.

$ arachni -fv http://test.com --report=afr:outfile=test.com.afr

The Arachni Framework Report (.afr) file can later be loaded by Arachni to create a report, like so:

$ arachni --repload=test.com.afr --report=html:outfile=my_report.html

or any other report type as shown by:

$ arachni --lsrep

You can make module loading easier by using wildcards (*) and exclusions (-).

To load all xss modules using a wildcard:

$ arachni http://example.net --mods=xss_*

To load all audit modules using a wildcard:

$ arachni http://example.net --mods=audit*

To exclude only the csrf module:

$ arachni http://example.net --mods=*,-csrf

Or you can mix and match; to run everything but the xss modules:

$ arachni http://example.net --mods=*,-xss_*
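The wildcard and exclusion rules can be illustrated with a minimal sketch that resolves a `--mods` value against a list of module names. The names are examples, and the matching rules (Ruby's `File.fnmatch`, exclusions applied after inclusions) are assumptions; Arachni's own resolution logic may differ in the details.

```ruby
# Example module names only; not necessarily Arachni's actual module list.
AVAILABLE = %w[xss_event xss_path xss_uri sqli sqli_blind_rdiff csrf path_traversal].freeze

def select_modules(spec, available = AVAILABLE)
  patterns = spec.split(",").map(&:strip).reject(&:empty?)
  exclusions, inclusions = patterns.partition { |pat| pat.start_with?("-") }
  exclusions = exclusions.map { |pat| pat.delete_prefix("-") }

  # Keep names matching any inclusion, then drop names matching any exclusion.
  available.select { |name| inclusions.any? { |pat| File.fnmatch(pat, name) } }
           .reject { |name| exclusions.any? { |pat| File.fnmatch(pat, name) } }
end

p select_modules("xss_*")    # only the xss_ modules
p select_modules("*,-csrf")  # everything except csrf
p select_modules("*,-xss_*") # everything except the xss_ modules
```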

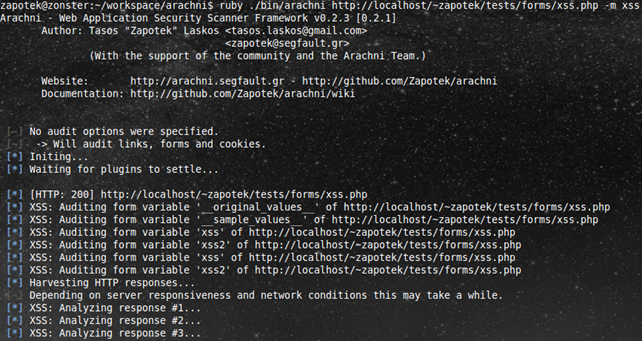

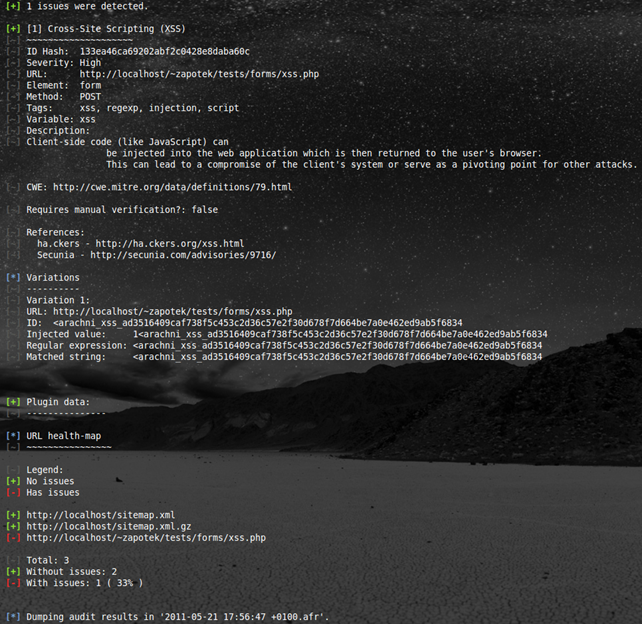

And here are some screenshots for your viewing pleasure:

Starting the scan.

Found a vulnerability.

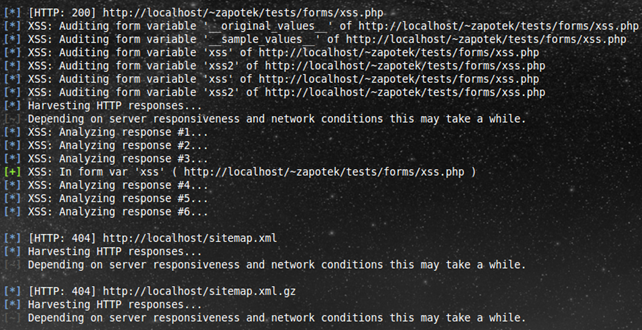

This is what happens when you hit Ctrl-C during a scan.

The juicy part of the standard output (stdout) report.

Web user interface (WebUI)

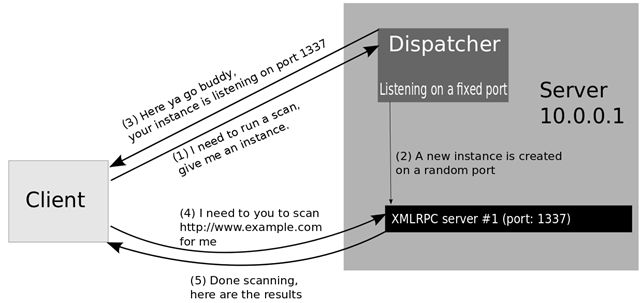

In order to better understand how the WebUI works it's important that you know what happens in the background.

To allow for distributed deployment Arachni uses XMLRPC over SSL to facilitate client-server communications.

Before I start explaining, let's first agree on some terminology.

Server instance

An ephemeral scanner instance/agent; it listens on a random port and waits for instructions.

Its job is to perform a single scan, send the results to the commanding client (the owner) and then die.

It materializes as a system process.

Dispatch server

A server that facilitates spawning and assigning instances to clients.

Upon a dispatch request, an instance is popped out of the pool and assigned to the client.

A simplified representation of the dispatch process.
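A minimal sketch of that pool, with hypothetical `ToyDispatcher`/`ToyInstance` names: the dispatcher keeps a few instances ready, pops one for each client and spawns a replacement. Real instances are separate processes reached over XMLRPC, which is not modelled here.

```ruby
ToyInstance = Struct.new(:port, :owner)

class ToyDispatcher
  def initialize(pool_size: 3, ports: (20_000..30_000).to_a.shuffle)
    @ports = ports # pool of random, unique ports to hand out
    @mutex = Mutex.new
    @pool  = Array.new(pool_size) { spawn_instance }
  end

  # Pop a ready instance, assign it to the client, and replenish the pool.
  def dispatch(owner)
    @mutex.synchronize do
      instance = @pool.shift
      instance.owner = owner
      @pool << spawn_instance
      instance
    end
  end

  def pool_size
    @mutex.synchronize { @pool.size }
  end

  private

  # Stands in for starting a scanner process listening on a random port.
  def spawn_instance
    ToyInstance.new(@ports.pop, nil)
  end
end

dispatcher = ToyDispatcher.new
a = dispatcher.dispatch("alice")
b = dispatcher.dispatch("bob")
puts "#{a.owner} -> port #{a.port}, #{b.owner} -> port #{b.port}"
puts "instances still waiting in the pool: #{dispatcher.pool_size}"
```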

The Web User Interface is basically a Sinatra app which acts as an Arachni XMLRPC client and connects to running XMLRPC Dispatch servers.

It is a way to:

- make working with Arachni easier

- make report management easier

- run and manage multiple scans at the same time (each scan will try its best for maximum bandwidth utilization so it'll be like lions fighting in a cage – make sure you have sufficient resources)

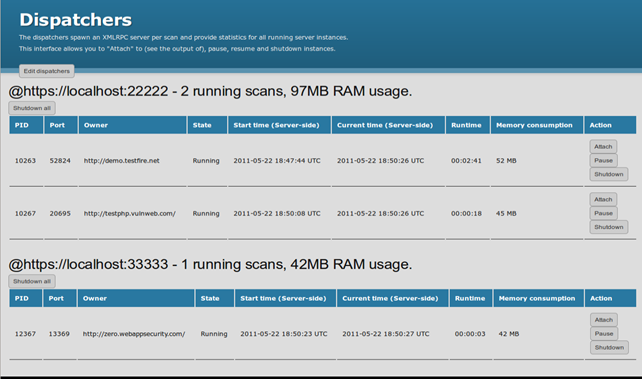

- work with and manage multiple Dispatchers

Each time you start a scan from the WebUI, it connects to a Dispatcher and requests a new server instance.

The WebUI then connects to the new instance and configures it according to user settings.

From that point on, the user can monitor/pause/abort the scan or forget about it and let the WebUI manage everything; once the scan finishes the WebUI will save the report and shut down the instance.

Anyone can essentially create and control a simple grid of Arachni scanners by adding multiple Dispatchers and distributing the work-load accordingly.
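One way to distribute the work-load, sketched under assumptions: the dispatcher addresses are hypothetical, and the load metric (number of running scans per Dispatcher) is a simple stand-in rather than anything the WebUI is documented to use.

```ruby
class ToyGrid
  def initialize(dispatcher_urls)
    @running = dispatcher_urls.to_h { |url| [url, 0] } # url => running scans
  end

  # Assign a scan to the least-loaded dispatcher and record the extra load.
  def assign(target)
    url, _count = @running.min_by { |_, count| count }
    @running[url] += 1
    [target, url]
  end

  # Call when a scan finishes so that dispatcher's load drops again.
  def finished(url)
    @running[url] -= 1
  end

  def running_counts
    @running.dup
  end
end

grid = ToyGrid.new(%w[https://dispatcher-a.example https://dispatcher-b.example])
targets = %w[http://a.test http://b.test http://c.test]
assignments = targets.map { |target| grid.assign(target) }
assignments.each { |target, url| puts "#{target} -> #{url}" }
p grid.running_counts
```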

Example

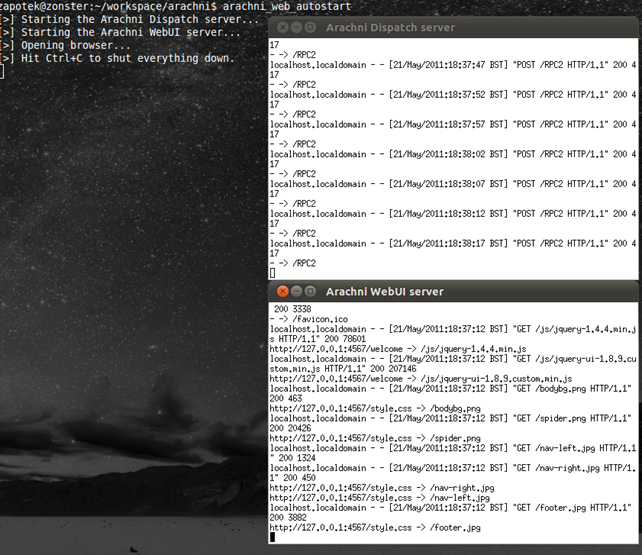

The easy way to start the WebUI is by running the "arachni_web_autostart" script.

This script will set up a Dispatch server and the WebUI, and launch your browser.

Running the autostart script.

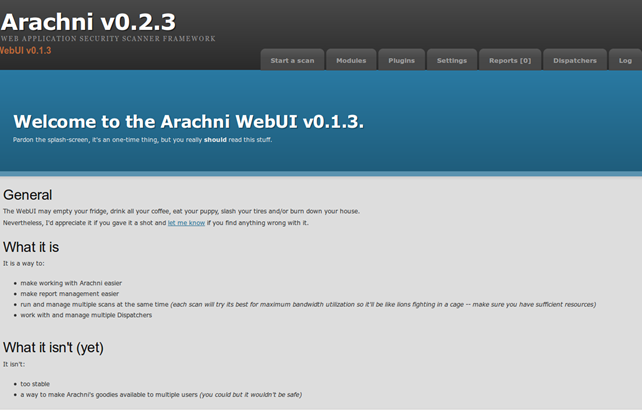

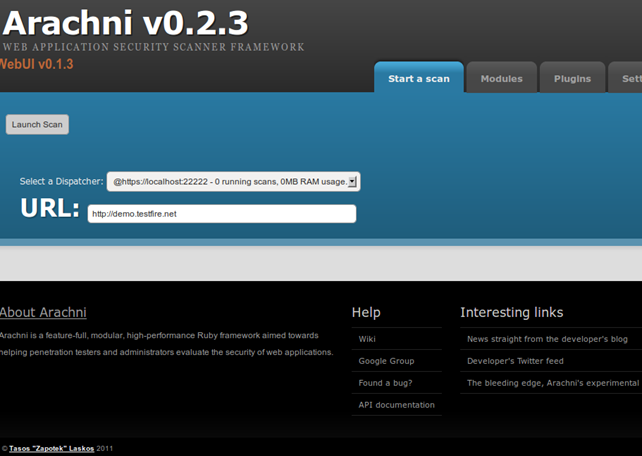

The welcome screen.

Starting a scan.

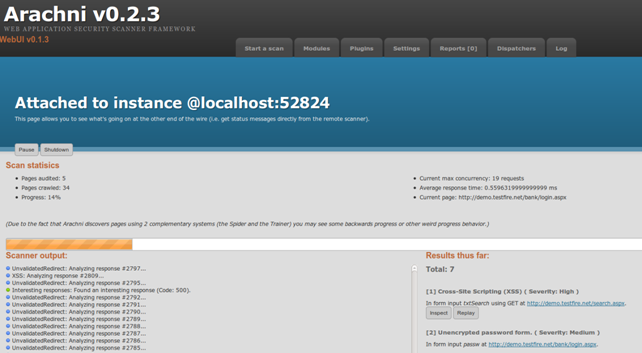

Monitoring the scan progress.

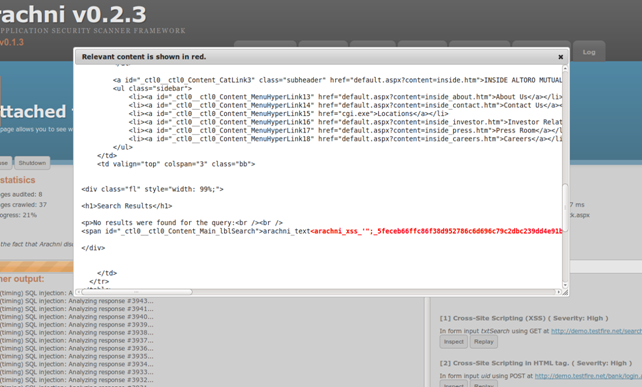

Inspecting an XSS vulnerability in real-time.

Monitoring multiple running scans.

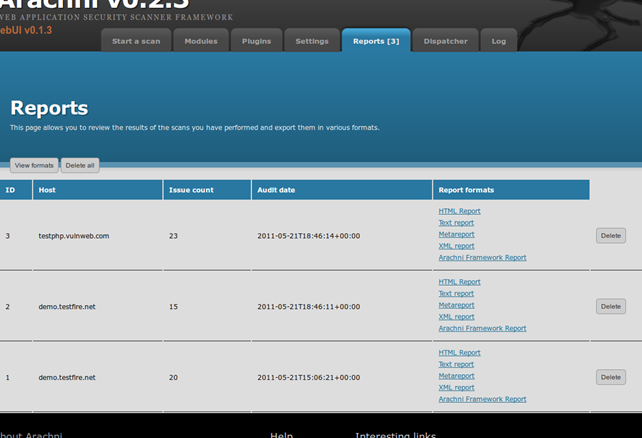

Managing scan reports.

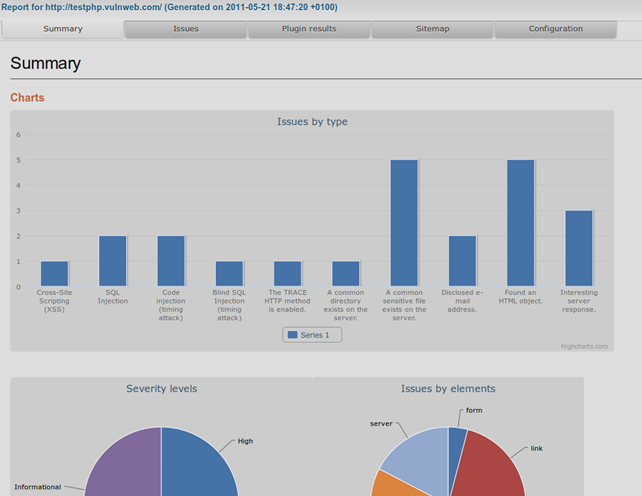

Executive summary of the HTML report.

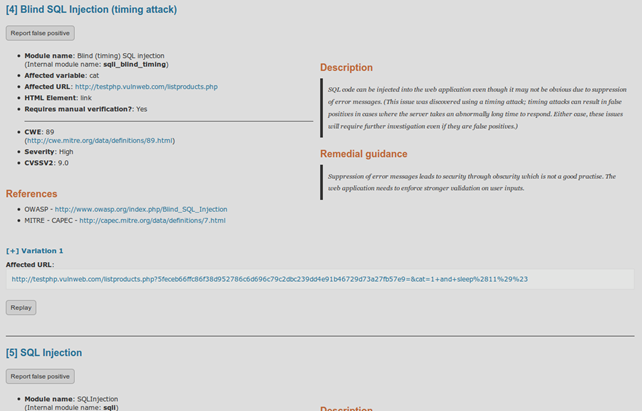

Inspecting the detected issues.

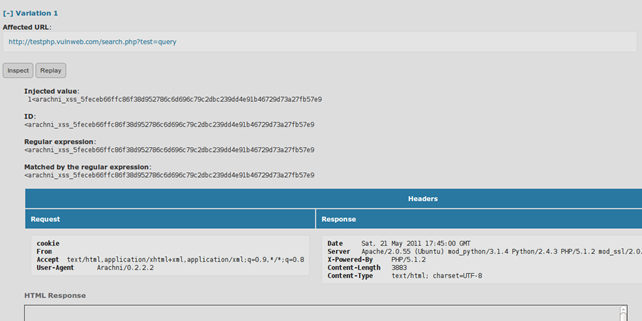

Inspecting a variation of an XSS vulnerability.

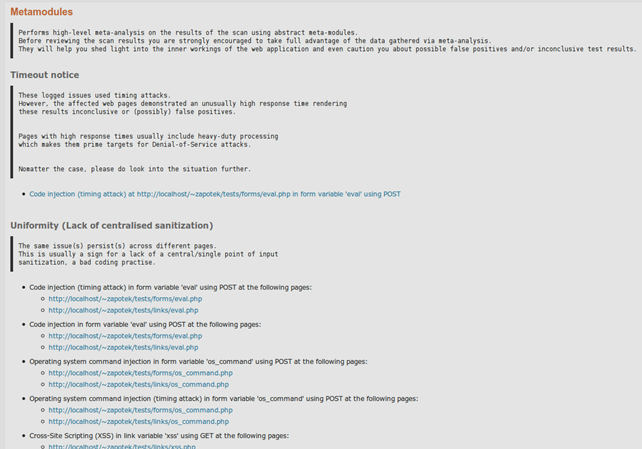

Meta-analysis results.

Caveats

Accidental DoS

I've mentioned the term "high-performance" multiple times; however, no good deed goes unpunished.

Because of this, Arachni can put a lot of stress on the scanned web/DB server, and there have been reported cases of web servers dying and DB servers crashing due to too many open connections.

Besides the obvious problem this creates for the sys admins, it can also distort the scan results.

To keep things under control Arachni uses 2 methods:

- Manual limiting

- Auto-throttling

The default number of concurrent requests is 20; this number can be adjusted using the '--http-req-limit' parameter. This is the manual limiting.

Auto-throttling comes into play when response times are either too high or too low.

Very low response times mean that there's room for more concurrent requests, while high response times mean high server stress, in which case the concurrent request limit is automatically lowered.

This helps to keep servers alive and network conditions steady.
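A minimal sketch of the two mechanisms together, starting from the default limit of 20 mentioned above. The thresholds, step sizes and bounds are hypothetical. The rule itself is additive-increase/multiplicative-decrease (as in TCP congestion control), a swapped-in illustration and not necessarily what Arachni does.

```ruby
class ToyThrottle
  attr_reader :limit

  def initialize(limit: 20, min: 1, max: 100, low_ms: 200, high_ms: 1_000)
    @limit, @min, @max = limit, min, max
    @low_ms, @high_ms  = low_ms, high_ms
  end

  # Feed in the average response time (ms) of the last batch of requests.
  def adjust(avg_response_ms)
    if avg_response_ms > @high_ms
      @limit = [@limit / 2, @min].max # server under stress: back off quickly
    elsif avg_response_ms < @low_ms
      @limit = [@limit + 2, @max].min # plenty of headroom: ramp up gently
    end
    @limit # unchanged when response times are in the comfortable middle band
  end
end

throttle = ToyThrottle.new
[150, 120, 1_500, 2_000, 600].each do |ms|
  puts "avg #{ms} ms -> limit #{throttle.adjust(ms)}"
end
```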

XMLRPC authentication

SSL is used to provide entity authentication and data confidentiality (encryption) services, which in turn requires key management.

By default, all XMLRPC communications are performed without peer verification.

If you want to control who is allowed to connect to the Dispatcher or the WebUI you need to configure the relevant keys and certificates.
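For illustration, this is what enforcing peer verification looks like with Ruby's standard OpenSSL bindings. The file paths are hypothetical placeholders, and this is not how Arachni's own Dispatcher or WebUI options are set; it only shows what "peer verification" means at the SSL layer.

```ruby
require "openssl"

def build_ssl_context(ca_file: nil, cert_file: nil, key_file: nil)
  ctx = OpenSSL::SSL::SSLContext.new
  # Verify the peer's certificate; when used on the server side, also
  # reject clients that present no certificate at all.
  ctx.verify_mode = OpenSSL::SSL::VERIFY_PEER | OpenSSL::SSL::VERIFY_FAIL_IF_NO_PEER_CERT
  ctx.ca_file = ca_file if ca_file # CA that signed the certificates we trust
  ctx.cert = OpenSSL::X509::Certificate.new(File.read(cert_file)) if cert_file
  ctx.key  = OpenSSL::PKey.read(File.read(key_file)) if key_file
  ctx
end

# Example (placeholder paths):
# ctx = build_ssl_context(ca_file: "ca.pem", cert_file: "server.pem", key_file: "server.key")
```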

Sources

- Homepage: http://arachni.segfault.gr

- Blog: http://trainofthought.segfault.gr/category/projects/arachni/

- Github page: http://github.com/zapotek/arachni

- Documentation: http://github.com/Zapotek/arachni/wiki

- Code Documentation: http://zapotek.github.com/arachni/

- Google Group: http://groups.google.com/group/arachni

- Author: Tasos "Zapotek" Laskos

- Twitter: http://twitter.com/Zap0tek

- Copyright: 2010-2011

- License: GNU General Public License v2