Introduction to Kubernetes security

Kubernetes is the most popular container orchestration platform. Every major cloud service provider offers it as a managed service: Google Cloud, AWS and Azure provide GKE, EKS and AKS respectively.

With Kubernetes this widely adopted, its security has naturally come under scrutiny.


The need for Kubernetes

Docker provides a way to containerize applications and run them. Let us assume that an organization has an application with 25 microservices, each requiring one Docker container. If we choose to use the Docker CLI, we will have to run the docker command manually, once per container, to bring up all the microservices.

One way to solve this problem is to use docker-compose, which can start all the containers with a single command. The requirements rarely stop there, however. Let us assume that five of the microservices must run on a host with GPU capacity. When demand rises, we may also need to run five additional copies of the entire application automatically. Similarly, when demand falls, we may want to remove some instances of the application. To solve all these problems, we need a framework.

Kubernetes does exactly that. It is a container orchestration framework that can auto-scale deployed containers, choose the best node on which to deploy a container, support multiple container runtimes and more. In simple terms, Docker allows us to run containers by acting as a container runtime, whereas Kubernetes helps us to manage these containers.
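To make the auto-scaling idea concrete, the following is a minimal sketch of a proportional scaling rule of the kind the Kubernetes Horizontal Pod Autoscaler documentation describes: the replica count is scaled by the ratio of the observed metric to its target and rounded up. The function name and the floor of one replica are assumptions made for this illustration, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Scale the replica count by how far the observed metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Round up so that we never under-provision; keep at least one replica
    # (the floor of 1 is an assumption of this sketch).
    return max(1, math.ceil(current_replicas * current_metric / target_metric))


if __name__ == "__main__":
    # Five replicas averaging 90% CPU against a 50% target: scale out.
    print(desired_replicas(5, 90.0, 50.0))
    # Five replicas averaging 20% CPU against a 50% target: scale in.
    print(desired_replicas(5, 20.0, 50.0))
```

The same rule covers both directions: a metric above the target adds copies, and a metric below it removes them.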

Overview of Kubernetes architecture

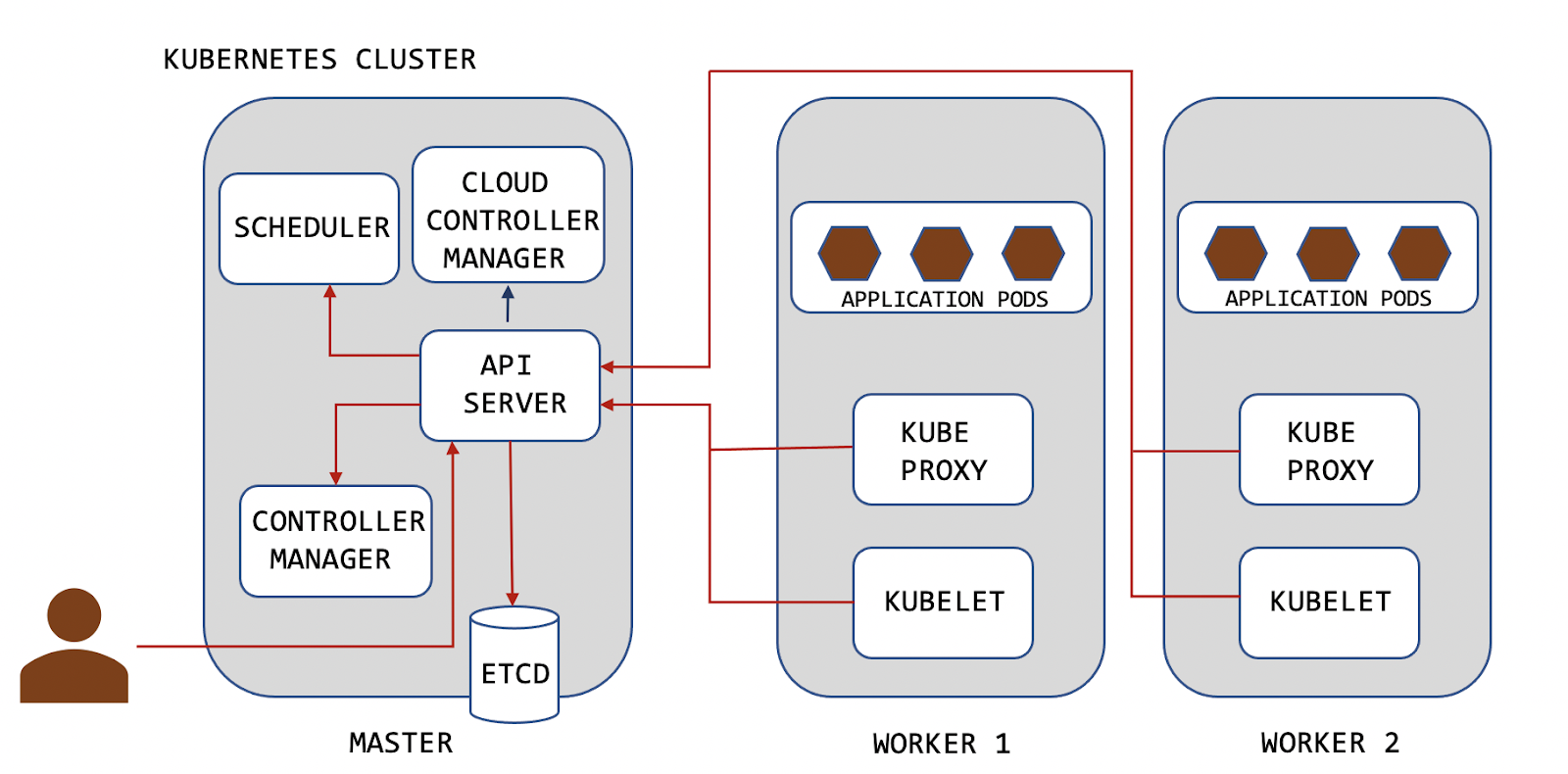

A Kubernetes cluster consists of one or more master nodes and zero or more worker nodes. Although a cluster with a single master node is enough to run workloads, any production-grade cluster will have more than one worker node to run the containerized applications, which provides fault tolerance and high availability. The master node is also known as the control plane.

The figure above shows a sample Kubernetes cluster with one master node and two worker nodes.

The worker nodes host the pods that make up the application workload, while the control plane manages the worker nodes and the pods in the cluster.

The control plane consists of several key components that make global decisions about the cluster, for example deciding which node is the right fit for an application pod. These components also respond to cluster events, such as creating a new pod when a pod crashes and the number of running pods no longer matches the replicas field of a deployment.

As shown in the preceding figure, the following are the key control plane components:

- API server

- Scheduler

- Controller manager

- Etcd

- Cloud controller manager

The following are the key worker node components:

- Kubelet

- Kube-proxy

- Container runtime

Let us begin by discussing the control plane components that run on the master node.

API server

The API server is the component of the Kubernetes control plane that exposes the Kubernetes API; it is the front end for the control plane. All requests to the other components on the master node go through the API server, and clients do not interact with the individual components directly. For instance, when a new pod is created in the cluster, the etcd database must be updated with the change, and that communication with etcd happens only via the API server. When an administrator runs a kubectl command, kubectl talks to the API server by sending REST requests.

The following kubectl command with the option --v=6 shows the HTTP request made to the API server. Note that the cluster here is set up using a tool called kind.

$ kubectl get nodes --v=6

I0408 18:45:35.635309 124845 loader.go:379] Config loaded from file: /home/k8s/.kube/config

I0408 18:45:35.657751 124845 round_trippers.go:445] GET https://127.0.0.1:44875/api/v1/nodes?limit=500 200 OK in 9 milliseconds

NAME STATUS ROLES AGE VERSION

kind-control-plane Ready control-plane,master 10h v1.20.2

kind-worker Ready <none> 10h v1.20.2

$

As the GET line in the output shows, the API server is reachable at https://127.0.0.1:44875 in this case.
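The URL in that log line follows the REST path layout of the Kubernetes API: resources in the core group live under /api/v1, while resources in named groups live under /apis/GROUP/VERSION. The following is a minimal sketch that rebuilds such a URL; the helper name resource_url and its parameters are hypothetical and do not belong to kubectl or any client library.

```python
from typing import Optional
from urllib.parse import urlencode


def resource_url(server: str, resource: str, namespace: Optional[str] = None,
                 group: str = "", version: str = "v1", **query) -> str:
    """Build the REST URL for listing a resource (hypothetical helper)."""
    # Core-group resources (nodes, pods, ...) use /api/<version>;
    # named groups (apps, batch, ...) use /apis/<group>/<version>.
    path = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    if namespace:
        # Namespaced resources add a /namespaces/<name> segment.
        path += f"/namespaces/{namespace}"
    path += f"/{resource}"
    url = server.rstrip("/") + path
    if query:
        url += "?" + urlencode(query)
    return url


if __name__ == "__main__":
    # Reproduces the request that `kubectl get nodes` made in the log above.
    print(resource_url("https://127.0.0.1:44875", "nodes", limit=500))
    # A namespaced resource in the "apps" group.
    print(resource_url("https://127.0.0.1:44875", "deployments",
                       namespace="default", group="apps"))
```

Nodes are cluster-scoped, which is why the logged request has no namespace segment.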

In addition, the API server is responsible for authenticating and authorizing client requests before processing them.
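The order of those two checks matters: the server first establishes who is calling, and only then decides whether that identity may perform the requested action. The following toy sketch illustrates the sequence. The token table, the permission table and the handle function are all hypothetical; a real cluster uses pluggable authenticators and authorizers such as RBAC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    token: str
    verb: str
    resource: str


# Hypothetical lookup tables, standing in for real authenticators and authorizers.
TOKENS = {"token-admin": "admin", "token-viewer": "viewer"}
PERMISSIONS = {
    "admin": {("get", "nodes"), ("get", "pods"), ("create", "pods")},
    "viewer": {("get", "pods")},
}


def handle(request: Request) -> int:
    """Return the HTTP status the request would receive in this toy model."""
    # Step 1: authentication. Unknown callers are rejected with 401 Unauthorized.
    user = TOKENS.get(request.token)
    if user is None:
        return 401
    # Step 2: authorization. Known callers without permission get 403 Forbidden.
    if (request.verb, request.resource) not in PERMISSIONS.get(user, set()):
        return 403
    # Step 3: only now would the request be processed.
    return 200


if __name__ == "__main__":
    print(handle(Request("token-admin", "get", "nodes")))
    print(handle(Request("token-viewer", "create", "pods")))
    print(handle(Request("bogus", "get", "pods")))
```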

Scheduler

According to the official Kubernetes documentation, “scheduler is a control plane component that watches for newly created pods with no assigned node, and selects a node for them to run on.”

Let us understand how the scheduler works using a simple example. When a client requests a new pod, the API server receives the request and records the pod, which at this point has no node assigned. The scheduler, which watches the API server for such pods, then looks for the right node to run it. Let us assume there are multiple nodes in the cluster and the pod to be created requires a minimum of 4 GB of RAM. The scheduler finds an appropriate node and reports its choice back to the API server. The kubelet on the assigned node learns of the assignment through the API server and starts the pod.

According to Kubernetes documentation, the following are some of the factors taken into account for scheduling decisions:

- Individual and collective resource requirements

- Hardware/software/policy constraints

- Affinity and anti-affinity specifications

- Data locality

- Inter-workload interference

- Deadlines
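The decision can be pictured as two passes: filter out nodes that cannot run the pod, then score the remaining nodes and pick the best one. The following is a minimal sketch of that idea using the 4 GB example above. The Node and Pod classes, the GPU flag and the "most free memory left" score are assumptions chosen for this illustration; the real scheduler weighs many more factors.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_memory_gb: float
    has_gpu: bool = False


@dataclass
class Pod:
    name: str
    memory_gb: float
    needs_gpu: bool = False


def pick_node(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Filtering: drop nodes that fail the resource or hardware constraints.
    feasible = [
        n for n in nodes
        if n.free_memory_gb >= pod.memory_gb and (n.has_gpu or not pod.needs_gpu)
    ]
    if not feasible:
        return None  # the pod stays unscheduled
    # Scoring (hypothetical rule): prefer the node with the most memory left over.
    best = max(feasible, key=lambda n: n.free_memory_gb - pod.memory_gb)
    return best.name


if __name__ == "__main__":
    nodes = [Node("worker-1", 2), Node("worker-2", 8), Node("worker-3", 6, has_gpu=True)]
    print(pick_node(Pod("api", memory_gb=4), nodes))
    print(pick_node(Pod("trainer", memory_gb=4, needs_gpu=True), nodes))
    print(pick_node(Pod("huge", memory_gb=32), nodes))
```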

Controller manager

The controller manager is a control plane component that runs controller processes. Several controllers run in a Kubernetes cluster. Their job is to watch the state of the cluster through the API server and make appropriate changes to move the current state toward the desired state.

As an example, let us assume we deployed an application with a desired state of three replicas for each pod in the application. If one of the pods goes down, the ReplicationController will notice and respond to this event. A ReplicationController ensures that a specified number of pod replicas are running at any one time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available. Logically, each controller is a separate process, but they are all compiled into a single binary and run in a single process.
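The core of such a controller is a reconcile step that compares the desired state with the observed state and emits the actions needed to close the gap. The following is a minimal sketch of that step for the three-replica example; the reconcile function and the action strings are hypothetical and only illustrate the pattern.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """Return the actions that move the observed pod count to the desired count."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        return ["create"] * diff
    if diff < 0:
        # Too many pods: delete the surplus (here simply the first ones listed).
        surplus = -diff
        return [f"delete {name}" for name in running_pods[:surplus]]
    # Observed state already matches the desired state.
    return []


if __name__ == "__main__":
    # One of three replicas has gone down.
    print(reconcile(3, ["web-a", "web-b"]))
    # Everything is as desired.
    print(reconcile(3, ["web-a", "web-b", "web-c"]))
    # The desired count was lowered to one.
    print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

A controller runs this comparison repeatedly, so a pod that disappears at any moment is replaced on the next pass.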

Examples of controllers that are shipped with Kubernetes:

- ReplicationController for pod replicas

- Node controller for noticing and responding when nodes go down

- Service accounts controller for default accounts and API access tokens for new namespaces

Etcd

Etcd is a consistent and highly available key-value store used as Kubernetes' backing store for all cluster data. All cluster-related information, such as the running nodes, the running pods and the expected state of the cluster, is stored in the etcd datastore. Any new object created in a Kubernetes cluster is written to the etcd store as a new entry.

It should be noted that the only component that talks directly to the etcd datastore is the API server. For high availability, etcd can be run as a cluster of several members on different servers.
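To illustrate what "a new entry in a key-value store" means, here is a small in-memory sketch. The class is not the etcd client API; it only mimics three ideas: values are stored under path-like keys, every write bumps a store-wide revision counter, and related objects can be listed by key prefix. The /registry/... key layout shown is an assumption modelled on how Kubernetes is commonly described as organizing its keys.

```python
import json
from typing import Any, Dict, List, Optional


class KeyValueStore:
    """Toy stand-in for a key-value store; not the etcd client API."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.revision = 0  # incremented on every write

    def put(self, key: str, value: Any) -> int:
        self.revision += 1
        self._data[key] = json.dumps(value)  # values are stored serialized
        return self.revision

    def get(self, key: str) -> Optional[Any]:
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)

    def list_prefix(self, prefix: str) -> List[str]:
        # Prefix listing is how "all pods in a namespace" can be looked up.
        return sorted(k for k in self._data if k.startswith(prefix))


if __name__ == "__main__":
    store = KeyValueStore()
    # Hypothetical keys; the exact layout is an assumption of this sketch.
    store.put("/registry/pods/default/web-1", {"node": "kind-worker", "phase": "Running"})
    store.put("/registry/pods/default/web-2", {"node": "kind-worker", "phase": "Pending"})
    store.put("/registry/nodes/kind-worker", {"ready": True})
    print(store.list_prefix("/registry/pods/default/"))
    print(store.get("/registry/pods/default/web-2"))
    print(store.revision)
```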

Cloud controller manager

The cloud controller manager is a Kubernetes control plane component that embeds cloud-specific control logic. It is present only when the cluster is set up in a cloud environment; a cluster run in a non-cloud environment has no cloud controller manager.

The purpose of the cloud controller manager is to link our cluster into the cloud provider's API and to separate the components that interact with the cloud platform from the components that only interact with our cluster.

The following is an overview of the worker node components.

Kubelet

The job of the kubelet is to manage the containers on its node. It runs on every node that is part of a Kubernetes cluster and ensures that the containers described for each pod are running and healthy.
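One part of keeping containers running is deciding which stopped containers to start again. Kubernetes pods carry a restartPolicy of Always, OnFailure or Never, and the following sketch shows how such a policy could drive that decision. The ContainerStatus class and the containers_to_restart function are hypothetical; they are not kubelet code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContainerStatus:
    name: str
    running: bool
    exit_code: int = 0  # only meaningful when the container is not running


def containers_to_restart(statuses: List[ContainerStatus],
                          restart_policy: str = "Always") -> List[str]:
    """Return the names of stopped containers that the policy says to restart."""
    restart = []
    for status in statuses:
        if status.running:
            continue  # healthy, nothing to do
        if restart_policy == "Always":
            restart.append(status.name)
        elif restart_policy == "OnFailure" and status.exit_code != 0:
            # Restart only containers that exited with an error.
            restart.append(status.name)
        # "Never": leave stopped containers alone.
    return restart


if __name__ == "__main__":
    statuses = [
        ContainerStatus("app", running=True),
        ContainerStatus("sidecar", running=False, exit_code=1),
        ContainerStatus("init-job", running=False, exit_code=0),
    ]
    print(containers_to_restart(statuses, "Always"))
    print(containers_to_restart(statuses, "OnFailure"))
    print(containers_to_restart(statuses, "Never"))
```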

Kube-proxy

Kube-proxy runs on every single node of a Kubernetes cluster and it is one of the key components. It is responsible for forwarding traffic to pods from inside and outside the cluster.
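Conceptually, forwarding means mapping a stable service name or address to one of the pods currently backing it. The following toy sketch shows that mapping with a simple round-robin choice. The ServiceProxy class, the service name and the pod addresses are all hypothetical, and the round-robin rule is an assumption of this sketch; the real kube-proxy programs rules in the node's networking stack rather than handling each connection in a process like this.

```python
from itertools import cycle
from typing import Dict, List


class ServiceProxy:
    """Toy model of service-to-pod forwarding; not how kube-proxy is implemented."""

    def __init__(self, endpoints: Dict[str, List[str]]) -> None:
        # One endless round-robin iterator per service that has backends.
        self._backends = {
            service: cycle(list(pods)) for service, pods in endpoints.items() if pods
        }

    def route(self, service: str) -> str:
        """Return the pod address that should receive the next connection."""
        backends = self._backends.get(service)
        if backends is None:
            raise LookupError(f"no endpoints for service {service!r}")
        return next(backends)


if __name__ == "__main__":
    # Hypothetical service with two backing pods.
    proxy = ServiceProxy({"web": ["10.244.1.5:8080", "10.244.2.7:8080"]})
    for _ in range(3):
        print(proxy.route("web"))
```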

Container runtime

The actual application workloads are packaged as images and run as containers. Container runtime is software that is responsible for running containers. Docker is a popular container runtime used in Kubernetes clusters. However, Kubernetes supports several other container runtimes, which include containerd, CRI-O and any implementation of the Kubernetes Container Runtime Interface (CRI).


Understanding Kubernetes

Understanding the Kubernetes architecture and its components is important so that you can use them properly.

Sources

Kubernetes components, Kubernetes

Etcd API design principles, ETCD

ReplicationController, Kubernetes