C# Multithreading Example

Introduction

The term "multithreaded programming" may sound complicated, but it is quite easy to do in C# and .NET. This article explains how multithreading works on a typical general-purpose computer. You will learn how the operating system manages thread execution and how to use the Thread class in your program to create and start managed threads. The article also covers what you need to know when programming applications with multiple threads, such as the Thread class, thread pools, threading issues, and BackgroundWorker.


Multithreading Overview

A thread is an independent stream of instructions in a program. An ordinary program follows the sequential programming model: it has a beginning, an end, a sequence of instructions, and a single point of execution at any given time. A thread is similar to a sequential program. However, a thread is not a program in itself: it cannot run on its own, but runs within a program.

The real interest in threads is not about a single sequential thread, but about the use of multiple threads in a single program, all running at the same time and performing different tasks. This mechanism is referred to as multithreading. A thread is considered a lightweight process because it runs within the context of a program and takes advantage of the resources allocated to that program.

Threads are important for both client and server applications. For example, when you type C# code in an editor, the dynamic help (IntelliSense) window immediately shows the topics relevant to the code. One thread waits for input from the user, while another does some background processing. A third thread can store the written data in a temporary file, while another one downloads a file from a specific location.

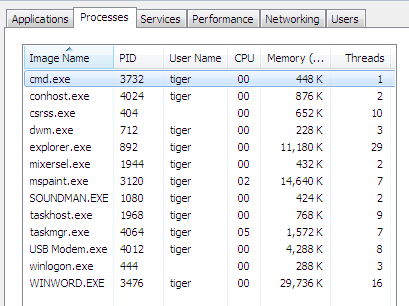

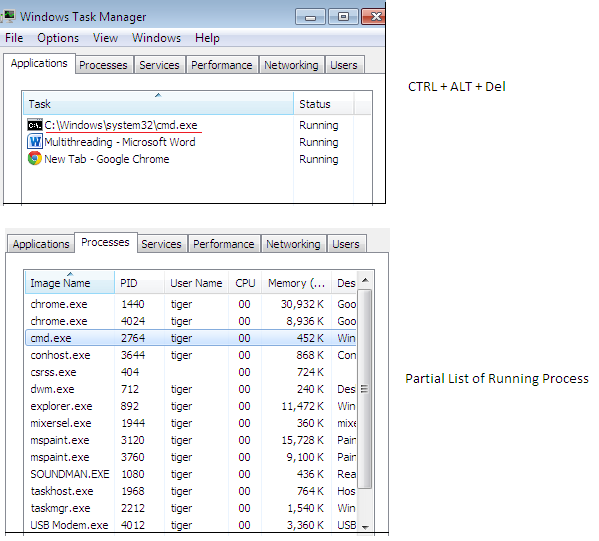

In Task Manager, you can turn on the Threads column and see the processes and the number of threads for every process. Here, you can see that only cmd.exe runs with a single thread, while all other applications use multiple threads.

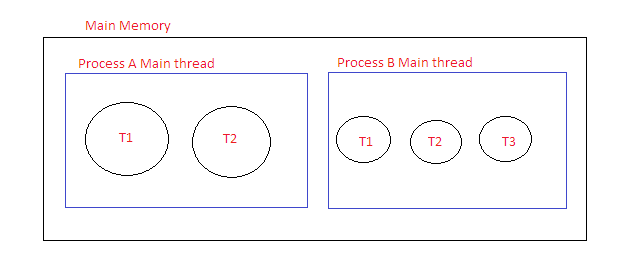

The operating system schedules threads. Each thread has a priority and its own stack, but the program code and the heap are shared among all threads of a single process.
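The split between per-thread stacks and shared process memory can be seen in a small sketch. The names used here (`StackVersusHeapDemo`, `sharedTotal`, `gate`, `Run`) are made up for this illustration, and the `lock` statement and `Join()` method it relies on are only introduced later or not covered in this article, so treat it as a preview rather than a reference implementation.

```csharp
using System;
using System.Threading;

static class StackVersusHeapDemo
{
    // A static field lives in memory that every thread of the process can see.
    static int sharedTotal = 0;
    static readonly object gate = new object();

    static void Work()
    {
        // 'local' lives on the calling thread's own stack, so each thread has its own copy.
        int local = 0;
        for (int i = 0; i < 1000; i++)
        {
            local++;
        }

        // The shared field is visible to all threads, so the update is guarded by a lock.
        lock (gate)
        {
            sharedTotal += local;
        }
    }

    // Runs Work on the given number of threads and returns the shared total.
    public static int Run(int threadCount)
    {
        sharedTotal = 0;
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(Work);
            threads[i].Start();
        }
        foreach (Thread t in threads)
        {
            t.Join(); // wait for each worker to finish
        }
        return sharedTotal;
    }

    static void Main()
    {
        Console.WriteLine("Shared total: {0}", Run(4));
    }
}
```

With four threads, each thread counts to 1000 in its own local variable and then adds that value to the shared field, so the program prints a total of 4000.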

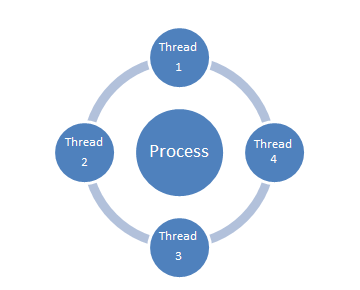

A process consists of one or more threads of execution, simply referred to as threads. A process always contains at least one thread, called the main thread, which runs the Main() method. A single-threaded process contains only one thread, while a multithreaded process can contain more than one thread of execution.

On a computer, the operating system loads and starts applications. Each application or service runs as a separate process on the machine. The following image illustrates that there are many more processes running than there are applications. Many of the processes are background operating system processes that are started automatically when the computer powers up.

System.Threading Namespace

On the .NET platform, the System.Threading namespace provides a number of types that enable the direct construction of multithreaded applications.

System.Threading.Thread class

The Thread class allows you to create and manage the execution of managed threads in your program. They are called managed threads because they are created and controlled through the .NET runtime, which lets you manipulate each thread you create directly from your code. You will find the Thread class, along with other useful types, in the System.Threading namespace.

Multithreading Implementation

The following sections work with a number of the static and instance-level members of the types in the System.Threading namespace.

Obtaining Current Thread Information

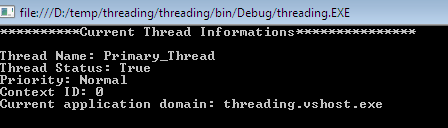

To illustrate the basic use of the Thread type, suppose you have a console application in which the static CurrentThread property retrieves a Thread object that represents the currently executing thread.

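[c language="sharp"]
using System;
using System.Threading; // required for the Thread class

namespace threading
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("**********Current Thread Information***************\n");

            // Get the Thread object for the thread that is running Main()
            Thread t = Thread.CurrentThread;

            // Name is empty until the program assigns one
            Console.WriteLine("Thread Name: {0}", t.Name);
            Console.WriteLine("Thread Status: {0}", t.IsAlive);
            Console.WriteLine("Priority: {0}", t.Priority);

            // Thread.CurrentContext is available on the .NET Framework
            Console.WriteLine("Context ID: {0}", Thread.CurrentContext.ContextID);

            Console.ReadKey();
        }
    }
}
[/c]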

After compiling and running this application, the output is as follows:

Simple Thread Creation

The following simple example shows the use of the Thread class, whose constructor accepts a delegate parameter. After the Thread object is created, you can start the thread with the Start() method, as follows:

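[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    class Program
    {
        static void Main(string[] args)
        {
            // Pass the method to run to the Thread constructor
            Thread t = new Thread(myFun);

            // The new thread does not run until Start() is called
            t.Start();

            Console.WriteLine("Main thread Running");
            Console.ReadKey();
        }

        static void myFun()
        {
            Console.WriteLine("Running other Thread");
        }
    }
}
[/c]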

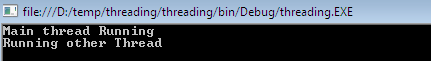

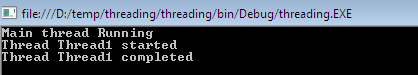

After running the application, you get the following output from the two threads:

The important point to note here is that there is no guarantee which output comes first, that is, which thread runs first. Threads are scheduled by the operating system, so the order can be different each time.
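The delegate passed to the Thread constructor can take several forms, and because start order is not guaranteed, a program that needs the results usually has to wait for the threads explicitly. The sketch below is one way to illustrate both points. The class and method names (`ThreadStartDemo`, `Record`, `Run`) are invented for the example, and `Join()` is not covered in this article; it blocks the caller until the thread has finished.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

static class ThreadStartDemo
{
    static readonly List<string> log = new List<string>();

    // Adds a message to the shared log; the lock keeps the list consistent.
    static void Record(string message)
    {
        lock (log) { log.Add(message); }
    }

    static void NoArgument() { Record("no argument"); }

    static void WithArgument(object state) { Record("argument: " + state); }

    public static List<string> Run()
    {
        lock (log) { log.Clear(); }

        // Three ways to hand work to a thread
        Thread a = new Thread(new ThreadStart(NoArgument));
        Thread b = new Thread(new ParameterizedThreadStart(WithArgument));
        Thread c = new Thread(() => Record("lambda"));

        a.Start();
        b.Start(42); // the value is passed to WithArgument as 'state'
        c.Start();

        // Wait for all three threads; the order of the log entries is still up to the scheduler.
        a.Join();
        b.Join();
        c.Join();

        lock (log) { return new List<string>(log); }
    }

    static void Main()
    {
        foreach (string line in Run())
        {
            Console.WriteLine(line);
        }
    }
}
```

All three messages are always present once `Run()` returns, but their order can change from one run to the next.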

Background Thread

The process of an application keeps running as long as at least one foreground thread is running. If more than one foreground thread is running and the Main() method ends, the process stays active until all foreground threads finish their work.

When you create a thread with the Thread class, you can define whether it should be a foreground or background thread by setting the IsBackground property. In the following example, the Main() method sets this property of the thread t to false, which is also the default. After starting the new thread, the main thread just writes a message to the console. The new thread writes a start and an end message, and in between it sleeps for two seconds.

[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    class Program
    {
        static void Main(string[] args)
        {
            Thread t = new Thread(myFun);
            t.Name = "Thread1";

            // false = foreground thread: the process waits for it to finish
            t.IsBackground = false;
            t.Start();

            Console.WriteLine("Main thread Running");
            Console.ReadKey();
        }

        static void myFun()
        {
            Console.WriteLine("Thread {0} started", Thread.CurrentThread.Name);
            Thread.Sleep(2000); // simulate two seconds of work
            Console.WriteLine("Thread {0} completed", Thread.CurrentThread.Name);
        }
    }
}
[/c]

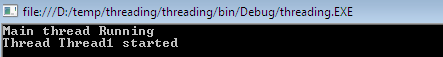

When you run this application, you will still see the completion message written to the console because the new thread is a foreground thread. The output is as follows:

If you change the IsBackground property of the new thread to true, the result shown at the console is different, as follows:
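As a further illustration, the sketch below starts a background thread and then waits for it explicitly. It assumes a helper named `StartWorker`, which is hypothetical and not part of the article's example, and it uses `Join()`, which the article does not cover. Without the `Join()` call, the process would be free to exit while the background thread is still sleeping.

```csharp
using System;
using System.Threading;

static class BackgroundDemo
{
    // Creates and starts a thread that sleeps briefly, as a foreground or background thread.
    public static Thread StartWorker(bool background)
    {
        Thread t = new Thread(() => Thread.Sleep(200));
        t.IsBackground = background; // set before Start()
        t.Start();
        return t;
    }

    static void Main()
    {
        Thread worker = StartWorker(true);
        Console.WriteLine("IsBackground: {0}", worker.IsBackground);

        // A background thread does not keep the process alive,
        // so wait for it explicitly if its work must complete.
        worker.Join();
        Console.WriteLine("Worker finished");
    }
}
```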

Concurrency issues

Programming with multiple threads is not an easy task. When you start multiple threads that access the same data, you can get intermittent problems that are hard to track down. When you build multithreaded applications, your program needs to ensure that any piece of shared data is protected against the possibility of several threads changing its value at the same time.

Race Condition

A race condition can occur if two or more threads access the same object and access to the shared state is not synchronized. To illustrate the problem, let's build a console application. This application uses the Test class to print 10 numbers, pausing the current thread for a random amount of time before each one.

[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    public class Test
    {
        public void Calculation()
        {
            for (int i = 0; i < 10; i++)
            {
                // Pause for a random time (0 to 4 ms) before printing
                Thread.Sleep(new Random().Next(5));
                Console.Write(" {0},", i);
            }
            Console.WriteLine();
        }
    }

    class Program
    {
        static void Main(string[] args)
        {
            Test t = new Test();
            Thread[] tr = new Thread[5];

            // Create five threads that all call the same Test instance
            for (int i = 0; i < 5; i++)
            {
                tr[i] = new Thread(new ThreadStart(t.Calculation));
                tr[i].Name = String.Format("Working Thread: {0}", i);
            }

            //Start each thread
            foreach (Thread x in tr)
            {
                x.Start();
            }

            Console.ReadKey();
        }
    }
}
[/c]

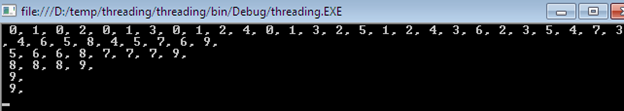

When this program runs, the primary thread within the application domain begins by creating five secondary threads. Each worker thread is told to call the Calculation method on the same Test instance. Because no precaution has been taken to lock down this object's shared resources, all five threads start to execute the Calculation method simultaneously. This is a race condition, and the application produces unpredictable output, as the screenshot shows.
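The same kind of problem affects shared data, not only console output. The sketch below is an illustration under assumptions: the class and field names are invented, and `Interlocked.Increment` is a System.Threading method that this article does not otherwise cover. It increments one counter without protection and another atomically, so the two totals can be compared.

```csharp
using System;
using System.Threading;

static class CounterRaceDemo
{
    static int unsafeCount;
    static int safeCount;

    static void Work()
    {
        for (int i = 0; i < 100000; i++)
        {
            unsafeCount++;                        // read, add, write: updates can be lost
            Interlocked.Increment(ref safeCount); // performed as one atomic operation
        }
    }

    // Returns { unsafeCount, safeCount } after all threads have finished.
    public static int[] Run(int threadCount)
    {
        unsafeCount = 0;
        safeCount = 0;

        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(Work);
            threads[i].Start();
        }
        foreach (Thread t in threads)
        {
            t.Join();
        }
        return new[] { unsafeCount, safeCount };
    }

    static void Main()
    {
        int[] result = Run(4);
        Console.WriteLine("Unsynchronized: {0}", result[0]);
        Console.WriteLine("Interlocked:    {0}", result[1]);
    }
}
```

The atomic counter always reaches 400000 with four threads. The unsynchronized counter may fall short of that by a different amount on each run, which is what makes this kind of bug hard to reproduce.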

Deadlocks

Too much locking can also get your application into trouble. In a deadlock, at least two threads wait for each other to release a lock. Because both threads wait for each other, they wait endlessly and the application hangs.

In the following example, both methods change the state of the two objects obj1 and obj2 by locking them. The method DeadLock1() first locks obj1 and then obj2. The method DeadLock2() first locks obj2 and then obj1. Suppose the first thread acquires the lock for obj1; then a thread switch occurs, and the second method starts to run and gets the lock for obj2. The second thread now waits for the lock on obj1, while the first thread waits for the lock on obj2. Both threads now wait and neither releases its lock. This is a typical deadlock.

[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    class Program
    {
        static object obj1 = new object();
        static object obj2 = new object();

        public static void DeadLock1()
        {
            lock (obj1)
            {
                Console.WriteLine("Thread 1 got locked");
                Thread.Sleep(500);

                // Waits here forever if DeadLock2() already holds obj2
                lock (obj2)
                {
                    Console.WriteLine("Thread 2 got locked");
                }
            }
        }

        public static void DeadLock2()
        {
            lock (obj2)
            {
                Console.WriteLine("Thread 2 got locked");
                Thread.Sleep(500);

                // Waits here forever if DeadLock1() already holds obj1
                lock (obj1)
                {
                    Console.WriteLine("Thread 1 got locked");
                }
            }
        }

        static void Main(string[] args)
        {
            Thread t1 = new Thread(new ThreadStart(DeadLock1));
            Thread t2 = new Thread(new ThreadStart(DeadLock2));

            t1.Start();
            t2.Start();

            Console.ReadKey();
        }
    }
}
[/c]
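One common way to avoid this kind of deadlock is to make every thread acquire the locks in the same order. The sketch below is a minimal illustration of that idea rather than a fix taken from the original example. The names `LockOrderingDemo`, `Worker`, and `Run` are hypothetical, and `Join(int)` and `Interlocked.Increment` are not covered in this article.

```csharp
using System;
using System.Threading;

static class LockOrderingDemo
{
    static readonly object obj1 = new object();
    static readonly object obj2 = new object();
    static int completed;

    // Every thread takes obj1 first and obj2 second, so no thread can
    // hold obj2 while waiting for obj1.
    static void Worker()
    {
        lock (obj1)
        {
            Thread.Sleep(100);
            lock (obj2)
            {
                Interlocked.Increment(ref completed);
            }
        }
    }

    // Returns true if both threads finished within the timeout.
    public static bool Run()
    {
        completed = 0;

        Thread t1 = new Thread(Worker);
        Thread t2 = new Thread(Worker);
        t1.Start();
        t2.Start();

        // Join(int) returns false if the thread is still running after the timeout.
        bool finished = t1.Join(5000) && t2.Join(5000);
        return finished && completed == 2;
    }

    static void Main()
    {
        Console.WriteLine("Both threads finished: {0}", Run());
    }
}
```

Because the second thread cannot take obj2 before it holds obj1, the circular wait from the previous example cannot form, and both threads run to completion one after the other.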

Synchronization

Problems that can happen with multiple threads, such as race conditions and deadlocks, can be avoided with synchronization. It is always advisable to avoid concurrency issues by not sharing data between threads. Of course, this is not always possible. If data sharing is necessary, you must use synchronization so that only one thread at a time accesses and changes the shared state. This section discusses various synchronization techniques.

Locks

We can synchronize access to shared resources using the lock keyword. While one thread holds the lock, other incoming threads must wait, so they cannot interrupt the current thread and prevent it from finishing its work. The lock keyword requires an object reference.

Returning to the previous race condition problem, we can refine the program by placing a lock around the critical statements to protect it from race conditions, as follows:

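[c language="sharp"]
public class Test
{
    // Private object used only for locking
    private object tLock = new object();

    public void Calculation()
    {
        // Only one thread at a time can execute this block
        lock (tLock)
        {
            for (int i = 0; i < 10; i++)
            {
                Thread.Sleep(new Random().Next(5));
                Console.Write(" {0},", i);
            }
            Console.WriteLine();
        }
    }
}
[/c]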

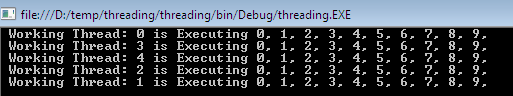

When you run this program, it now produces the desired result, as follows. Here, each thread has sufficient opportunity to finish its task.

Monitor

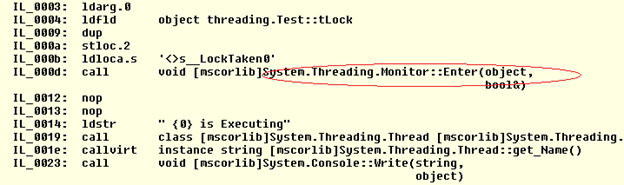

The lock statement is resolved by the compiler to the use of the Monitor class. The Monitor class works much like the lock statement but has a big advantage in terms of control. You can instruct the active thread to wait for a certain amount of time and inform waiting threads when the current thread has completed. Once processed by the C# compiler, a lock scope resolves to roughly the following code.

[c language="sharp"]
object tLock = new object();

public void Calculation()
{
    Monitor.Enter(tLock);
    try
    {
        for (int i = 0; i < 10; i++)
        {
            Thread.Sleep(new Random().Next(5));
            Console.Write(" {0},", i);
        }
        Console.WriteLine();
    }
    finally
    {
        // Always release the lock, even if an exception is thrown
        Monitor.Exit(tLock);
    }
}
[/c]

If you view the IL code of the lock application using ILDASM, you will find the calls to the Monitor class there.
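The extra control that Monitor offers over the lock statement can be sketched with `Monitor.TryEnter`, which gives up after a timeout instead of blocking forever. The example below is an illustration under assumptions: the names `TryEnterDemo`, `TryWork`, and `Run` are invented, and `ManualResetEvent` is used only to make sure the lock is really held before the attempt is made.

```csharp
using System;
using System.Threading;

static class TryEnterDemo
{
    static readonly object gate = new object();

    // Tries to take the lock within the timeout and reports whether it succeeded.
    public static bool TryWork(int timeoutMs)
    {
        if (!Monitor.TryEnter(gate, timeoutMs))
        {
            return false; // the lock was busy for the whole timeout
        }
        try
        {
            // Protected work would go here.
            return true;
        }
        finally
        {
            Monitor.Exit(gate);
        }
    }

    // Returns { result while another thread holds the lock, result after it is released }.
    public static bool[] Run()
    {
        using (ManualResetEvent held = new ManualResetEvent(false))
        {
            Thread holder = new Thread(() =>
            {
                lock (gate)
                {
                    held.Set();        // signal that the lock is now held
                    Thread.Sleep(500); // keep the lock for a while
                }
            });
            holder.Start();
            held.WaitOne();

            bool whileHeld = TryWork(50);    // times out while the lock is held
            holder.Join();
            bool afterRelease = TryWork(50); // succeeds once the lock is free

            return new[] { whileHeld, afterRelease };
        }
    }

    static void Main()
    {
        bool[] result = Run();
        Console.WriteLine("While held: {0}, after release: {1}", result[0], result[1]);
    }
}
```

A timeout like this lets a thread report a failure or try something else, which the plain lock statement cannot do.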

Using [Synchronization] Attribute

The [Synchronization] attribute is a member of the System.Runtime.Remoting.Contexts namespace. This class-level attribute effectively locks down all instance members of the object for thread safety. To use it, the class must derive from ContextBoundObject.

[c language="sharp"]
using System;
using System.Runtime.Remoting.Contexts;
using System.Threading;

// All instance members of Test are synchronized by the runtime
[Synchronization]
public class Test : ContextBoundObject
{
    public void Calculation()
    {
        for (int i = 0; i < 10; i++)
        {
            Thread.Sleep(new Random().Next(5));
            Console.Write(" {0},", i);
        }
        Console.WriteLine();
    }
}
[/c]

Mutex

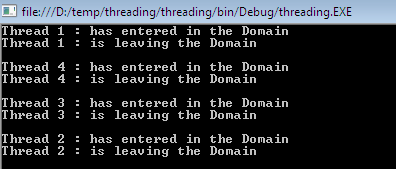

Mutex stands for mutual exclusion. It is a mechanism that offers synchronization across multiple threads. The Mutex class derives from WaitHandle, so you can call WaitOne() to acquire the mutex lock and become the owner of the mutex for that time. The mutex is released by invoking the ReleaseMutex() method, as follows:

[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    class Program
    {
        private static Mutex mutex = new Mutex();

        static void Main(string[] args)
        {
            for (int i = 0; i < 4; i++)
            {
                Thread t = new Thread(new ThreadStart(MutexDemo));
                t.Name = string.Format("Thread {0} :", i + 1);
                t.Start();
            }
            Console.ReadKey();
        }

        static void MutexDemo()
        {
            mutex.WaitOne(); // Wait until it is safe to enter.
            try
            {
                Console.WriteLine("{0} has entered in the Domain", Thread.CurrentThread.Name);
                Thread.Sleep(1000); // Simulate some work.
                Console.WriteLine("{0} is leaving the Domain\r\n", Thread.CurrentThread.Name);
            }
            finally
            {
                // Release the mutex so that the next waiting thread can enter
                mutex.ReleaseMutex();
            }
        }
    }
}
[/c]

When you run this program, each newly created thread enters the protected section (the "Domain" in the output) in turn. Once a thread finishes its task, it releases the mutex, and the next thread enters, and so on.

Semaphore

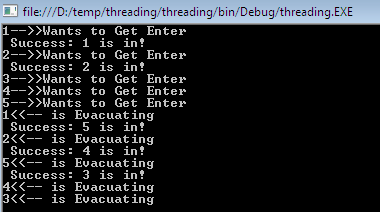

A semaphore is very similar to a mutex, but a semaphore can be used by multiple threads at once while a mutex can't. With a semaphore, you can define a count of how many threads are allowed to access the resources shielded by the semaphore simultaneously.

In the following example, five threads are created along with a semaphore that has a count of two. In the constructor of the Semaphore class, you can define the number of locks that can be acquired with the semaphore.

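[c language="sharp"]
using System;
using System.Threading;

namespace threading
{
    class Program
    {
        // Two threads may enter at a time (counts assumed from the text: initial 2, maximum 2)
        static Semaphore obj = new Semaphore(2, 2);

        static void Main(string[] args)
        {
            for (int i = 1; i <= 5; i++)
            {
                new Thread(SempStart).Start(i);
            }
            Console.ReadKey();
        }

        static void SempStart(object id)
        {
            Console.WriteLine(id + "-->>Wants to Get Enter");
            obj.WaitOne(); // blocks while two other threads are inside
            try
            {
                Thread.Sleep(2000);
                Console.WriteLine(id + "<<-- is Evacuating");
            }
            finally
            {
                // Give the slot back so that a waiting thread can enter
                obj.Release();
            }
        }
    }
}
[/c]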

When we run this application, two threads acquire the semaphore immediately and the rest wait: because we created five threads, three are in the waiting state. The moment one of the threads releases the semaphore, the waiting threads enter one by one, as follows.
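The limit that a semaphore enforces can also be checked in code rather than read off the console. The sketch below is only an illustration: `SemaphoreLimitDemo`, `Run`, and the counters `inside` and `maxInside` are hypothetical names, and the counters exist purely to record how many threads were inside the protected section at the same time.

```csharp
using System;
using System.Threading;

static class SemaphoreLimitDemo
{
    static int inside;     // threads currently inside the protected section
    static int maxInside;  // highest value 'inside' ever reached
    static readonly object gate = new object();

    // Starts threadCount threads behind a semaphore that admits 'limit' at a time
    // and returns the highest number of threads seen inside together.
    public static int Run(int threadCount, int limit)
    {
        inside = 0;
        maxInside = 0;

        using (Semaphore semaphore = new Semaphore(limit, limit))
        {
            Thread[] threads = new Thread[threadCount];
            for (int i = 0; i < threadCount; i++)
            {
                threads[i] = new Thread(() =>
                {
                    semaphore.WaitOne();
                    try
                    {
                        lock (gate)
                        {
                            inside++;
                            if (inside > maxInside) maxInside = inside;
                        }
                        Thread.Sleep(100); // simulate some work
                        lock (gate) { inside--; }
                    }
                    finally
                    {
                        semaphore.Release();
                    }
                });
                threads[i].Start();
            }
            foreach (Thread t in threads)
            {
                t.Join();
            }
        }
        return maxInside;
    }

    static void Main()
    {
        Console.WriteLine("Most threads inside at once: {0}", Run(5, 2));
    }
}
```

With five threads and a limit of two, the reported maximum never exceeds two, no matter how the threads are scheduled.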

Summary

This article explained how to code applications that use multiple threads with the System.Threading namespace. Using multithreading in your application can cause concurrency issues such as race conditions and deadlocks. Finally, the article discussed various means of synchronization, such as locks, Monitor, Mutex, and Semaphore, which handle concurrency problems by protecting thread-sensitive blocks of code so that shared resources do not become corrupted.